CUB – a configurable C++ template library of high-performance CUDA primitives

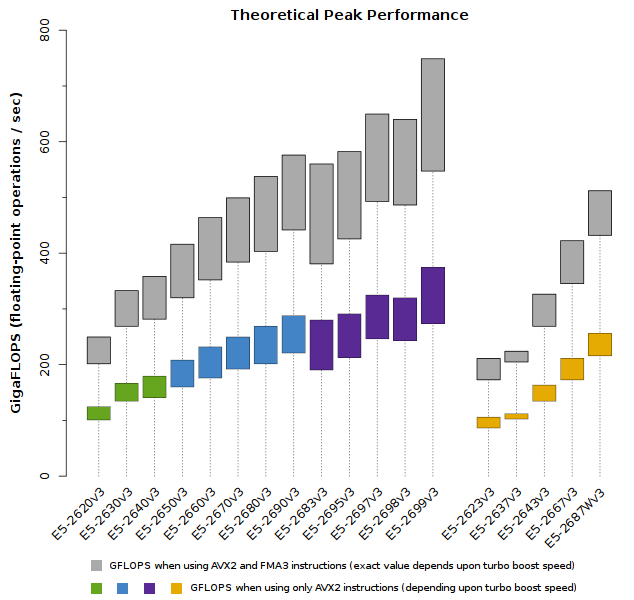

Each new generation of NVIDIA GPUs brings with it a dramatic increase in compute power, and the pace of development over the past several years has been rapid. The Tesla M2090, based on the Fermi GF110 architecture announced in 2010, offered global memory bandwidth of up to 177 Gigabytes per second and peak double-precision floating-point performance of 665 Gigaflops. By comparison, today’s Tesla K40 (Kepler GK110b architecture) has peak memory bandwidth of 288 Gigabytes per second and a reported peak double-precision performance of over 1.4 Teraflops. However, the K40’s reign as the most advanced GPGPU hardware is coming to an end, and Kepler will shortly be superseded by Maxwell-class cards.

Achieving optimal performance across diverse GPU architectures can be challenging, since it relies on carefully crafted kernels that incorporate extensive knowledge of the underlying hardware and take full advantage of relevant features of the CUDA programming model. This places a considerable burden on the CUDA developer seeking to port her application to a new generation of GPUs or looking to ensure performance across a range of architectures.

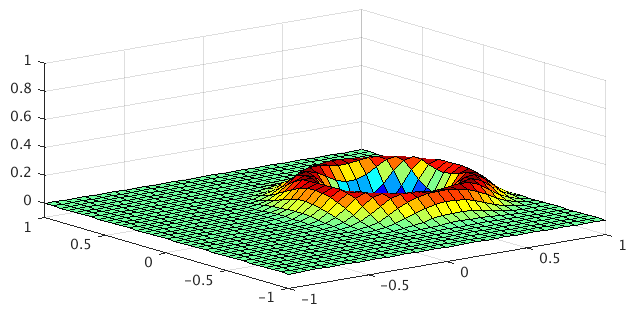

Fortunately, many CUDA applications are formulated in terms of a small set of primitives, such as parallel reduce, scan, or sort. Before attempting to handcraft these primitive operations ourselves, we should consider using one of the libraries of optimized primitives available to CUDA developers. Such libraries include Thrust and CUDPP, but in this post, we will focus on the CUB library developed by Duane Merrill of NVIDIA Research. CUB – the name derives from “CUDA Unbound” – provides generic high-performance primitives targeting multiple levels of application development. For example, CUB supports a set of device-wide primitives, which are called from the host, and in this regard, the functionality provided by CUB overlaps with Thrust to some degree. However, unlike Thrust, CUB also provides a set of kernel components that operate at the thread-block and thread-warp levels.
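To make the distinction between the two levels concrete, here is a minimal sketch that sums an array in two stages: a block-level `cub::BlockReduce` inside a kernel, followed by a device-wide `cub::DeviceReduce::Sum` called from the host. The kernel name `BlockSumKernel`, the constant `kBlockThreads`, and the input of all ones are assumptions chosen for illustration. In practice a single `cub::DeviceReduce::Sum` call over the whole input would do the job, and error checking is omitted for brevity.

```cuda
#include <cstdio>
#include <cub/cub.cuh>

// Hypothetical block size chosen for this sketch.
constexpr int kBlockThreads = 128;

// Block-level primitive: the threads of each block cooperatively sum
// one tile of the input and write a single partial sum per block.
__global__ void BlockSumKernel(const int *d_in, int *d_block_sums, int num_items)
{
    using BlockReduce = cub::BlockReduce<int, kBlockThreads>;
    __shared__ typename BlockReduce::TempStorage temp_storage;

    int idx = blockIdx.x * kBlockThreads + threadIdx.x;
    int thread_value = (idx < num_items) ? d_in[idx] : 0;

    // The result is only valid in thread 0 of the block.
    int block_sum = BlockReduce(temp_storage).Sum(thread_value);
    if (threadIdx.x == 0) d_block_sums[blockIdx.x] = block_sum;
}

int main()
{
    const int num_items  = 1024;
    const int num_blocks = (num_items + kBlockThreads - 1) / kBlockThreads;

    // Illustrative input: every element is 1, so the total equals num_items.
    int h_in[num_items];
    for (int i = 0; i < num_items; ++i) h_in[i] = 1;

    int *d_in = nullptr, *d_block_sums = nullptr, *d_total = nullptr;
    cudaMalloc(&d_in, num_items * sizeof(int));
    cudaMalloc(&d_block_sums, num_blocks * sizeof(int));
    cudaMalloc(&d_total, sizeof(int));
    cudaMemcpy(d_in, h_in, num_items * sizeof(int), cudaMemcpyHostToDevice);

    // Stage 1: per-block partial sums using the block-level primitive.
    BlockSumKernel<<<num_blocks, kBlockThreads>>>(d_in, d_block_sums, num_items);

    // Stage 2: device-wide primitive, called from the host.
    // The first call (null storage pointer) only queries the scratch size.
    void  *d_temp_storage     = nullptr;
    size_t temp_storage_bytes = 0;
    cub::DeviceReduce::Sum(d_temp_storage, temp_storage_bytes,
                           d_block_sums, d_total, num_blocks);
    cudaMalloc(&d_temp_storage, temp_storage_bytes);
    cub::DeviceReduce::Sum(d_temp_storage, temp_storage_bytes,
                           d_block_sums, d_total, num_blocks);

    int h_total = 0;
    cudaMemcpy(&h_total, d_total, sizeof(int), cudaMemcpyDeviceToHost);
    printf("total = %d (expected %d)\n", h_total, num_items);

    cudaFree(d_temp_storage);
    cudaFree(d_total);
    cudaFree(d_block_sums);
    cudaFree(d_in);
    return 0;
}
```

The block-level component is parameterized at compile time by the data type and block size and is handed its shared-memory scratch space explicitly, whereas the device-wide call launches its own kernels and asks the caller to allocate temporary storage through a two-step query-then-run pattern.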
