NVIDIA DGX Platform

NVIDIA DGX Deployments for Scale-Out AI

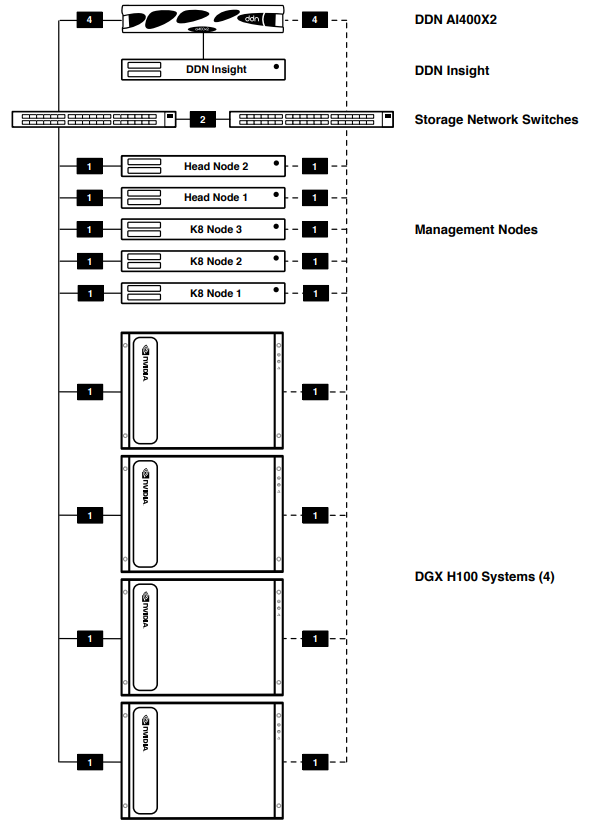

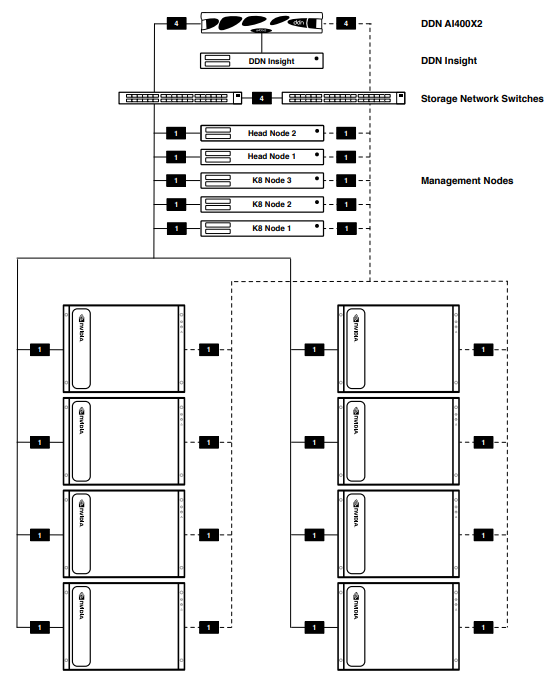

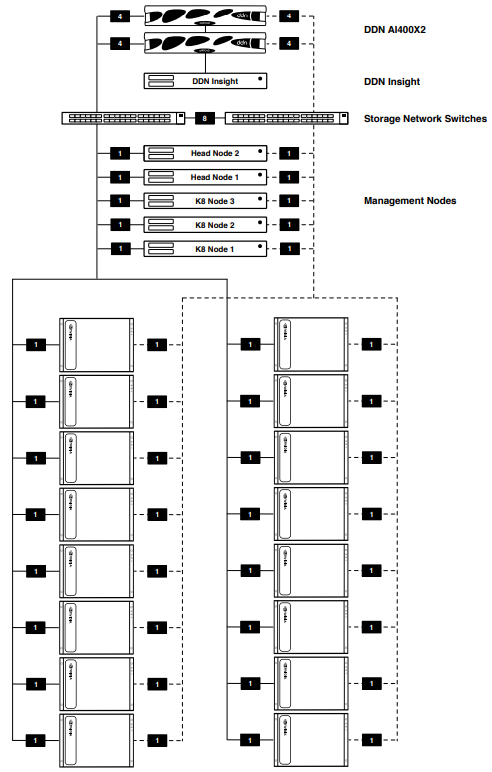

NVIDIA DGX POD and DGX BasePOD solutions provide the underlying infrastructure (compute, networking, and storage) and the software needed to speed the deployment and execution of AI workloads. DGX POD deployments also include the AI data plane, or storage layer, that can keep up with these workloads, offering capacity for large training datasets, room to expand as needs grow, and the high storage throughput that scale-out AI requires.

DDN A3I + DGX BasePOD Digital Whitepaper

Dive deep into the architecture of a DGX BasePOD configuration with DDN A3I storage, including detailed performance and throughput metrics.