Several customers have told me that it’s difficult to find someone who can speak with them intelligently about PCI-E root complex questions. And yet, these questions are of vital importance when considering multi-CPU systems that have various PCI-Express devices (most often GPUs or coprocessors).

First, please feel free to contact one of Microway’s experts. We’d be happy to work with you on your project to ensure your design will function correctly (both in theory and in practice). We also diagram most GPU platforms we sell, as well as explain their advantages, in our GPU Solutions Guide.

It is tempting to just look at the number of PCI-Express slots in the systems you’re evaluating and assume they’re all the same. Unfortunately, it’s not so simple, because each CPU provides only a limited number of PCI-Express lanes and therefore a limited amount of bandwidth. Additionally, certain high-performance features, such as NVIDIA’s GPUDirect technology, require that all components be attached to the same PCI-Express root complex. Servers and workstations with multiple processors have multiple PCI-Express root complexes. We dive deeply into these issues in our post about Common PCI-Express Myths.

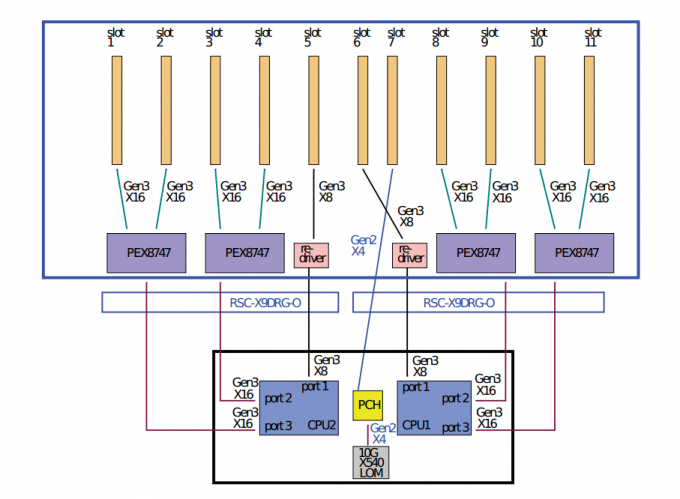

To illustrate, let’s look at the PCI-Express design of Microway’s latest 8-GPU Octoputer server:

It’s a bit difficult to parse, but the important points are:

- Two CPUs are shown in blue at the bottom of the diagram. Each CPU contains one PCI-Express tree.

- Each CPU provides 32 lanes of PCI-Express generation 3.0 for the accelerator slots (split as two x16 connections).

- PCI-Express switches (the purple boxes labeled PEX8747) further expand each CPU’s tree out to four x16 PCI-Express gen 3.0 slots.

- The remaining 8 lanes of PCI-E from each CPU (along with 4 lanes from the Southbridge chipset) provide connections for the remaining PCI-E slots. Although these slots are not compatible with accelerator cards, they are excellent for networking and/or storage cards.
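
The lane arithmetic in the points above can be sketched in a few lines of Python. This is only an illustrative model: the `CpuLaneBudget` class and its field names are made up for this example, and the figures are the ones quoted in the list (32 lanes feeding the switches, four x16 slots behind them, and 8 lanes left over per CPU).

```python
from dataclasses import dataclass

LANES_PER_X16_SLOT = 16


@dataclass(frozen=True)
class CpuLaneBudget:
    """Hypothetical lane accounting for one CPU (one PCI-Express root complex)."""

    uplink_lanes: int    # lanes running from the CPU to the PCI-Express switches
    switched_slots: int  # x16 slots the switches expose below this CPU
    spare_lanes: int     # CPU lanes left over for the non-accelerator slot

    @property
    def cpu_lanes_used(self) -> int:
        # Total lanes this CPU contributes to the design.
        return self.uplink_lanes + self.spare_lanes

    @property
    def switched_lanes(self) -> int:
        # Total lanes presented to the x16 slots behind the switches.
        return self.switched_slots * LANES_PER_X16_SLOT

    @property
    def oversubscription(self) -> float:
        # Ratio of slot-side lanes to CPU-side lanes.
        return self.switched_lanes / self.uplink_lanes


# Figures taken from the bullet points above.
per_cpu = CpuLaneBudget(uplink_lanes=32, switched_slots=4, spare_lanes=8)

print(per_cpu.cpu_lanes_used)    # 40
print(per_cpu.switched_lanes)    # 64
print(per_cpu.oversubscription)  # 2.0
```

The 2.0 ratio means the four x16 slots on each CPU share 32 lanes back to that CPU. That only matters when all of the slots move data to or from the CPU at the same time; traffic between two devices behind the same switch may not need the uplink at all.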

Having one additional x8 slot on each CPU allows the accelerators to communicate directly with storage or high-speed networks without leaving the PCI-E root complex. For technologies such as GPUDirect, this means rapid RDMA transfers between the GPUs and the network (which can significantly improve performance).
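
As a rough sketch of why this placement matters, the snippet below pairs each GPU with a network card on the same root complex. The device names (`gpu0`, `nic0`, and so on) and the `ROOT_COMPLEX_OF` map are hypothetical and hand-written for illustration; on a real system you would derive this mapping from the platform’s topology tools rather than hard-coding it.

```python
# Hypothetical map of device name -> root complex (CPU) it hangs off.
# Assumes one network card in the x8 slot of each CPU.
ROOT_COMPLEX_OF = {
    "gpu0": "cpu0", "gpu1": "cpu0", "gpu2": "cpu0", "gpu3": "cpu0",
    "nic0": "cpu0",
    "gpu4": "cpu1", "gpu5": "cpu1", "gpu6": "cpu1", "gpu7": "cpu1",
    "nic1": "cpu1",
}


def shares_root_complex(dev_a, dev_b, topology=ROOT_COMPLEX_OF):
    """Return True when both devices sit under the same root complex."""
    return topology[dev_a] == topology[dev_b]


def local_nics(gpu, topology=ROOT_COMPLEX_OF):
    """List the network cards that share a root complex with the given GPU."""
    return sorted(
        dev for dev in topology
        if dev.startswith("nic") and shares_root_complex(gpu, dev, topology)
    )


print(shares_root_complex("gpu0", "nic0"))  # True
print(shares_root_complex("gpu0", "nic1"))  # False
print(local_nics("gpu5"))                   # ['nic1']
```

A job scheduler or MPI launcher could use a check like this to bind each GPU to its local network card, so that transfers stay inside one root complex.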

In total, you end up with eight x16 slots and two x8 slots evenly divided between two PCI-Express root complexes. The final x4 slot can be used for lower-bandwidth devices.
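
The slot totals can be double-checked with a small inventory. The `SLOTS` list below is an assumed encoding of the layout described in this post, with the x4 slot attributed to the Southbridge; the owner labels are illustrative names, not identifiers from any real tool.

```python
from collections import Counter

# (owner, link width) for every slot described in this post.
SLOTS = (
    [("cpu0", 16)] * 4 + [("cpu0", 8)]
    + [("cpu1", 16)] * 4 + [("cpu1", 8)]
    + [("southbridge", 4)]
)


def tally_slots(slots):
    """Group slots by owner and count how many of each width it has."""
    tally = {}
    for owner, width in slots:
        tally.setdefault(owner, Counter())[width] += 1
    return tally


def count_width(slots, width):
    """Count slots of a given link width across the whole system."""
    return sum(1 for _, slot_width in slots if slot_width == width)


tally = tally_slots(SLOTS)
print(tally["cpu0"] == tally["cpu1"])  # True: the two root complexes match
print(count_width(SLOTS, 16))          # 8
print(count_width(SLOTS, 8))           # 2
print(count_width(SLOTS, 4))           # 1
```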

While the layout above may not be ideal for all projects, it performs well for many applications. We have a variety of other options available (including designs that place a large number of devices on a single PCI-E root complex). We’d be happy to discuss them with you.