The phrase “High Performance Computing” gets thrown around a lot, but HPC means different things to different people. Every group with compute needs has its own requirements and workflow. Many groups will never need 100+ TFLOPS of compute and 1+ PB of storage, while others need even more. If your current system just isn’t sufficient, keep reading to learn what type of HPC might fit your needs.

Workstations and Servers: Closer Than Ever

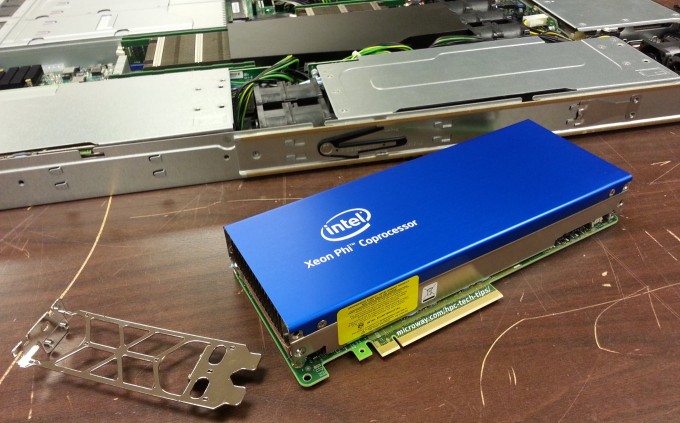

Aside from their physical appearance, the distinction between rackmount servers and desktop workstations has become very blurred. A single workstation can hold 32 processor cores and 512GB of memory, or four double-width GPUs on PCI Express 3.0 x16 slots. There are, of course, tradeoffs between high-end workstations and rackmount servers, but you may be surprised how much you can achieve without jumping to a traditional HPC cluster.