The 2nd Generation AMD EPYC “Rome” CPUs are here! Rome brings greater core counts, faster memory, and PCI-E Gen4 all to deliver what really matters: up to a 2X increase in HPC application performance. We’re excited to present our thoughts on this advancement, and the return of x86 server CPU competition, in our detailed AMD EPYC Rome review. AMD is unquestionably back to compete for the performance crown in HPC.

2nd Generation AMD EPYC “Rome” CPUs are offered in 8-64 cores and clock speeds from 2.2-3.2GHz. They are available in dual socket as well as a select number of single socket only SKUs.

Important changes in AMD EPYC “Rome” CPUs include:

- Up to 64 cores, 2X the max in the previous generation for a massive advancement in aggregate throughput

- PCI-E Gen 4 support for 2X the I/O bandwidth of the x86 competition, a first for an x86 server CPU

- 2X the FLOPS per core of the previous generation EPYC CPUs with the new Zen2 architecture

- DDR4-3200 support for improved memory bandwidth across 8 channels, reaching up to 204.8GB/sec per socket

- Next Generation Infinity Fabric with higher bandwidth for intra and inter-die connection, with roots in PCI-E Gen4

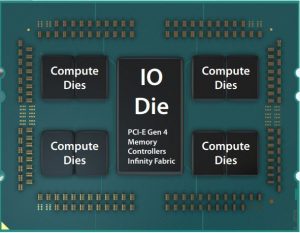

- New 14nm + 7nm chiplet architecture that separates the 14nm IO and 7nm compute core dies to yield the performance per watt benefits of the new TSMC 7nm process node

Leadership HPC Performance

There’s no other way to say it: the 2nd Generation AMD EPYC “Rome” CPUs (EPYC 7xx2) break new ground for HPC performance. In our experience, we haven’t seen this type of advancement in CPU performance in many years or without exotic architectural changes. This leap applies across floating point and integer applications.

Note: This article focuses on SPEC benchmark performance (which is rooted in real integer and floating point applications). If you’re hunting for a more raw FLOPS/dollar calculation, please visit our Knowledge Center Article on AMD EPYC 7xx2 “Rome” CPUs.

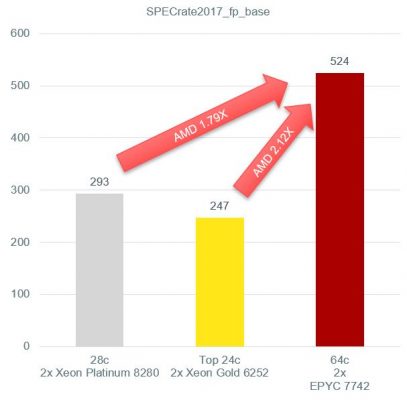

Floating Point Benchmark Performance

In short: at the top bin, you may see up to 2.12X the performance of the competition. This is compared to the top bin of Xeon Gold Processor (Xeon Gold 6252) on SPECrate2017_fp_base.

Compared to the top Xeon Platinum 8200 series SKU (Xeon Platinum 8280), you may see up to 1.79X the performance.

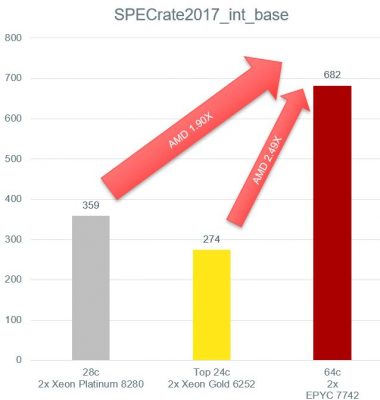

Integer Benchmark Performance

Integer performance largely mirrors the same story. At the top bin, you may see up to 2.49X the performance of the competition. This is compared to the top bin of Xeon Gold Processor (Xeon Gold 6252) on SPECrate2017_int_base.

Compared to the top Xeon Platinum 8200 series SKU (Xeon Platinum 8280), you may see up to 1.90X the performance.

What Makes EPYC 7xx2 Series Perform Strongly?

Contributions towards this leap in performance come from a combination of:

- The 2X the FLOPS per core available in the new architecture

- Improved performance of Zen2 microarchitecture

- Moderate increases in clock speeds

- Most importantly, dramatic increases in core count

These last 2 items are facilitated by the new 7nm process node and the chiplet architecture of EPYC. Couple that with the advantages in memory bandwidth, and you have a recipe for HPC performance.
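To make the interplay of these factors concrete, here is a minimal sketch of the usual theoretical-peak calculation (cores x clock x FLOPs per cycle). The FLOPs-per-cycle figures are assumptions not stated in this article: 8 double-precision FLOPs per cycle per core for first-generation Zen and 16 for Zen2. The 32-core comparison part is hypothetical and runs at the same clock purely to isolate the effect of core count and FLOPS per core.

```python
# Assumed double-precision FLOPs per cycle per core (not stated in the article):
# 8 for first-generation Zen, 16 for Zen2 with its 256-bit FMA units.
ZEN1_FLOPS_PER_CYCLE = 8
ZEN2_FLOPS_PER_CYCLE = 16


def peak_gflops(cores: int, clock_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak GFLOPS for one socket: cores x clock x FLOPs/cycle."""
    return cores * clock_ghz * flops_per_cycle


# EPYC 7742: 64 cores at a 2.25 GHz base clock (from the SKU table).
rome_peak = peak_gflops(64, 2.25, ZEN2_FLOPS_PER_CYCLE)

# Hypothetical 32-core previous-generation part at the same clock,
# used only to isolate the core-count and FLOPS-per-core effects.
prior_peak = peak_gflops(32, 2.25, ZEN1_FLOPS_PER_CYCLE)

print(f"Rome peak: {rome_peak:.0f} GFLOPS")
print(f"Ratio vs hypothetical prior part: {rome_peak / prior_peak:.1f}X")
```

Real applications rarely sustain theoretical peak, which is one reason measured gains tend to land well below what this arithmetic suggests.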

Performance Outlook

The dramatic increase in core count coupled with Zen2 means that we predict most of the models with 32 cores and above, about half of AMD’s SKU stack, are likely to outperform the top Xeon Platinum 8200 series SKU. Stay tuned for the SPEC benchmarks that confirm this assertion.

If you’re comparing against more modest Xeon Gold 62xx or Silver 52xx/42xx SKUs, we predict an even more dramatic performance uplift. This is the first time in many years we’ve seen such an incredibly competitive product from the AMD Server Group.

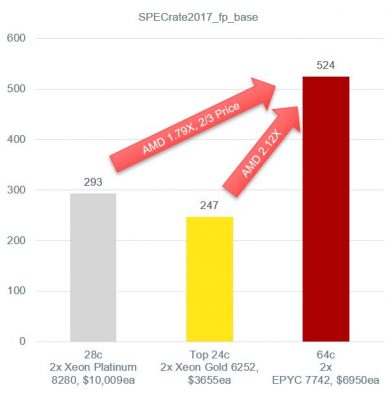

Class Leading Price/Performance

AMD EPYC 7xx2 series isn’t just impressive from an absolute performance perspective. It’s also a price performance machine.

Examine these same two top-bin SKUs once again:

The top-bin AMD SKU does 1.79X the floating point work at approximately 2/3 the price of Xeon Platinum 8280. It delivers 2.13X the floating point performance of the Xeon Gold 6252 at roughly similar price/performance.
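The price/performance claim against the Xeon Platinum 8280 can be checked with simple arithmetic. The sketch below uses only the two ratios quoted above (1.79X the performance, about 2/3 the price); the function name is hypothetical and chosen for illustration.

```python
def perf_per_dollar_ratio(relative_perf: float, relative_price: float) -> float:
    """Performance per dollar relative to a baseline CPU.

    Both inputs are ratios against the same baseline, so a result
    above 1.0 means more work per dollar than the baseline.
    """
    return relative_perf / relative_price


# Top-bin EPYC vs Xeon Platinum 8280: 1.79X the floating point
# performance at approximately 2/3 the price.
vs_platinum_8280 = perf_per_dollar_ratio(1.79, 2 / 3)
print(f"Performance per dollar vs Platinum 8280: about {vs_platinum_8280:.1f}X")
```

Under these figures the top-bin EPYC delivers roughly 2.7X the floating point work per dollar of the Platinum 8280, using CPU list prices only.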

Should you be willing to accept more modest core counts with the lower cost SKUs, these comparisons just get better.

Finally, if you’re looking to roughly match or exceed the performance of the top-bin Xeon Gold 6252 SKU, we predict you’ll be able to do so with the 24-core EPYC 7352. This will be at just over 1/3 the price of the Xeon socket.

This much more typical comparison is emblematic of the price-performance advantage AMD has delivered in the new generation of CPUs. Stay tuned for more benchmark results and charts to support the prediction.

A Few Caveats: Performance Tuning & Out of the Box

Application Performance Engineers have spent years optimizing applications for the most widely available x86 server CPU. For a number of years now, that has meant Intel’s Xeon processors. The benchmarks presented here represent performance-tuned results.

We don’t yet have great data on how easy it is to achieve optimized performance with these new AMD “Rome” CPUs. Those of us who have been in HPC for some time know that out of the box performance and optimized performance can often mean very different things.

AMD does recommend specific compilers (AOCC, GCC, LLVM) and libraries (BLIS over BLAS and FLAME over LAPACK) to achieve optimized results with all EPYC CPUs. We don’t yet have a complete understanding of how much these help end users achieve these superior results. Does it require a lot of tuning for the most exceptional performance?

AMD has, however, released a new Compiler Options Quick Reference Guide for the new CPUs. We strongly recommend using these flags and options for tuning your application.

Chiplet and Multi-Die Architecture: IO and Compute Dies

One of the chief innovations in the 2nd Generation AMD EPYC CPUs is in the evolution of the multi-die architecture pioneered in the first EPYC CPUs.

Rather than create one monolithic, hard to yield die, AMD has opted to lash “chiplets” together in a single socket with Infinity Fabric technology.

Compute Dies (now in 7nm)

8 compute chiplets (formally, Core Complex Dies or CCDs) are brought together to create a single socket. These CCDs take advantage of the latest 7nm TSMC process node. By using 7nm for the compute cores in 2nd Generation EPYC, AMD takes advantage of the space and power efficiencies of the latest process, without the yield issues of a single monolithic die.

What does it mean for you? More cores than anticipated in a single socket, a reasonable power efficiency for the core count, and a less costly CPU.

The 14nm IO Die

In 2nd Generation EPYC CPUs, AMD has gone a step further with the chiplet architecture. These chiplets are now complemented by a separate I/O die. The I/O die contains the memory controllers, PCI-Express controllers, and Infinity Fabric connection to the remote socket. This also resolves the NUMA affinity quirks of the 1st generation EPYC Processors.

Moreover, the I/O die is created in the established 14nm node process. It’s less important that it utilize the same 7nm power efficiencies.

DDR4-3200 and Improved Memory Bandwidth

AMD EPYC 7xx2 series improves its theoretical memory bandwidth when compared to both its predecessor and the competition.

DDR4-3200 DIMMs are supported, and they are clocked 20% faster than DDR4-2666 and 9% faster than DDR4-2933.

In summary, the platform offers:

- Compared to Cascade Lake-SP (Xeon Platinum/Gold 82xx, 62xx): Up to a 45% improvement in memory bandwidth

- Compared to Skylake-SP (Xeon Platinum/Gold 81xx, 61xx): Up to a 60% improvement in memory bandwidth

- Compared to AMD EPYC 7xx1 Series (Naples): Up to a 20% improvement in memory bandwidth

These comparisons are created for a system where only the first DIMM per channel is populated. Part of this memory bandwidth advantage is derived from the increase in DIMM speeds (DDR4-3200 vs 2933/2666); part of it is derived from EPYC’s 8 memory channels (vs 6 on Xeon Skylake/Cascade Lake-SP).
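The percentages above follow from the standard theoretical-bandwidth formula: channels x transfer rate x 8 bytes per transfer. Here is a minimal sketch of that arithmetic. The channel counts come from this article; pairing each platform with a specific DIMM speed (DDR4-2933 for Cascade Lake-SP, DDR4-2666 for Skylake-SP and Naples) is an assumption made for illustration.

```python
def peak_bandwidth_gb_s(channels: int, mt_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth per socket in GB/s.

    Each DDR4 channel is 64 bits wide, so it moves 8 bytes per transfer.
    """
    return channels * mt_per_s * bytes_per_transfer / 1000


# (memory channels, DIMM speed in MT/s); DIMM speed pairings are assumed.
platforms = {
    "EPYC 7xx2 (Rome)": (8, 3200),
    "Xeon Cascade Lake-SP": (6, 2933),
    "Xeon Skylake-SP": (6, 2666),
    "EPYC 7xx1 (Naples)": (8, 2666),
}

rome = peak_bandwidth_gb_s(*platforms["EPYC 7xx2 (Rome)"])


def rome_advantage_pct(name: str) -> float:
    """Percentage by which Rome's theoretical bandwidth exceeds a platform's."""
    return 100 * (rome / peak_bandwidth_gb_s(*platforms[name]) - 1)


for name, (channels, speed) in platforms.items():
    bandwidth = peak_bandwidth_gb_s(channels, speed)
    print(f"{name}: {bandwidth:.1f} GB/s (Rome advantage: {rome_advantage_pct(name):.0f}%)")
```

These are one-DIMM-per-channel theoretical figures; measured STREAM results will be lower on every platform.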

While we’ve yet to see final STREAM testing numbers for the new CPUs, we do anticipate them largely reflecting the changes in theoretical memory bandwidth.

PCI-E Gen4 Support: 2X the I/O bandwidth

EPYC “Rome” CPUs have an integrated PCI-E generation 4.0 controller on the I/O die. Each PCI-E lane doubles in maximum theoretical bandwidth to 4GB/sec (bidirectional).

A 16 lane connection (PCI-E x16 4.0 slot) can now deliver up to 64GB/sec of bidirectional bandwidth (32GB/sec in each direction). That’s 2X the bandwidth compared to first generation EPYC and the x86 competition.
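The rounded figures above can be reproduced from the per-lane signaling rate. The sketch below assumes the rates from the PCI-Express specifications (8 GT/s for Gen3, 16 GT/s for Gen4) and their 128b/130b line encoding, neither of which is spelled out in this article. With encoding overhead included, the results come out slightly below the rounded 32/64GB/sec values.

```python
# Assumed per-lane signaling rates in GT/s, per the PCI-Express specifications.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}

# Gen3 and Gen4 both use 128b/130b encoding: 128 payload bits per 130 sent.
ENCODING_EFFICIENCY = 128 / 130


def pcie_gb_s(gen: int, lanes: int, bidirectional: bool = False) -> float:
    """Theoretical PCI-E bandwidth in GB/s for a link of the given width."""
    per_lane = TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8  # bits -> bytes
    total = per_lane * lanes
    return total * 2 if bidirectional else total


for gen in (3, 4):
    one_way = pcie_gb_s(gen, 16)
    both_ways = pcie_gb_s(gen, 16, bidirectional=True)
    print(f"Gen{gen} x16: {one_way:.1f} GB/s per direction, {both_ways:.1f} GB/s bidirectional")
```

Protocol overhead (packet headers, flow control) reduces achievable throughput further, so treat these as upper bounds.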

Broadening Support for High Bandwidth I/O Devices

The new support allows for higher bandwidth connection to InfiniBand and other fabric adapters, storage adapters, NVMe SSDs, and in the future GPU Accelerators and FPGAs.

Some of these devices, like Mellanox ConnectX-6 200Gb HDR InfiniBand adapters, were unable to realize their maximum bandwidth in a PCI-E Gen3 x16 slot. Their performance should improve in PCI-E Gen4 x16 slot with 2nd Generation AMD EPYC Processors.
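A quick check shows why a 200Gb adapter is slot-limited on Gen3. This sketch compares the adapter's line rate against the usable one-way rate of an x16 slot, working in Gb/s. The signaling rates (8 and 16 GT/s) and 128b/130b encoding are assumptions taken from the PCI-Express specifications, and the helper name is hypothetical.

```python
HDR_INFINIBAND_GBIT_S = 200  # line rate of a 200Gb HDR InfiniBand port


def slot_gbit_s(gt_per_s: float, lanes: int) -> float:
    """Usable one-way slot bandwidth in Gb/s after 128b/130b encoding."""
    return gt_per_s * lanes * 128 / 130


gen3_x16 = slot_gbit_s(8.0, 16)   # assumed Gen3 rate: 8 GT/s per lane
gen4_x16 = slot_gbit_s(16.0, 16)  # assumed Gen4 rate: 16 GT/s per lane

for label, slot in (("Gen3 x16", gen3_x16), ("Gen4 x16", gen4_x16)):
    verdict = "can" if slot >= HDR_INFINIBAND_GBIT_S else "cannot"
    print(f"{label}: {slot:.0f} Gb/s, {verdict} carry a {HDR_INFINIBAND_GBIT_S} Gb/s port at line rate")
```

The Gen3 slot tops out at roughly 126 Gb/s in each direction, well short of the port's line rate, while the Gen4 slot leaves headroom.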

2nd Generation AMD EPYC “Rome” is the only x86 server CPU with PCI-E Gen4 support at its launch in 3Q 2019. However, we have seen PCI-E Gen4 support before in the POWER9 platform.

System Support for PCI-E Gen4

Unlike in the previous generation AMD EPYC “Naples” CPUs, there is no strong affinity of PCI-E lanes to a particular chiplet inside the processor. In Rome, all I/O traffic routes through the I/O die and all chiplets reach PCI-E devices through this die.

In order to support PCI-E Gen4, server and motherboard manufacturers are producing brand new versions of their platforms. Not every Rome-ready platform supports Gen4, so if this is a requirement, be sure to specify it to your hardware vendor. Our team can help you select a server with full Gen4 capability.

Infinity Fabric

Deeply interrelated with PCI-Express Gen4, AMD has also improved the Infinity Fabric Link between chiplets and sockets with the new generation of EPYC CPUs.

AMD’s Infinity Fabric has many commonalities with PCI-Express used to connect I/O devices. With 2nd Generation AMD EPYC “Rome” CPUs, the link speed of Infinity Fabric has doubled. This allows for higher bandwidth communication between dies on the same socket and to dies on remote sockets.

The result should be improved application performance for NUMA-aware and especially non-NUMA-aware applications. The increased bandwidth should help hide any transport bandwidth issues to I/O devices on a remote socket as well. The overall result is “smoother” performance when applications scale across multiple chiplets and sockets.

SKUs and Strategies to Consider for HPC Clusters

Here is the complete list of SKUs and 1KU (1000 unit) prices (Source: AMD). Please note that these prices are for CPUs sold to channel integrators, not for fully integrated systems with these CPUs.

Dual Socket SKUs

| SKU | Cores | Base Clock (GHz) | Boost Clock (GHz) | L3 Cache | TDP | Price (1KU) |
|---|---|---|---|---|---|---|
| 7742 | 64 | 2.25 | 3.4 | 256MB | 225W | $6950 |
| 7702 | 64 | 2.0 | 3.35 | 256MB | 200W | $6450 |
| 7642 | 48 | 2.3 | 3.3 | 256MB | 225W | $4775 |
| 7552 | 48 | 2.2 | 3.3 | 192MB | 200W | $4025 |
| 7542 | 32 | 2.9 | 3.4 | 128MB | 225W | $3400 |
| 7502 | 32 | 2.5 | 3.35 | 128MB | 180W | $2600 |
| 7452 | 32 | 2.35 | 3.35 | 128MB | 155W | $2025 |
| 7402 | 24 | 2.8 | 3.35 | 128MB | 180W | $1783 |
| 7352 | 24 | 2.3 | 3.2 | 128MB | 155W | $1350 |
| 7302 | 16 | 3.0 | 3.3 | 128MB | 155W | $978 |
| 7282 | 16 | 2.8 | 3.2 | 64MB | 120W | $650 |
| 7272 | 12 | 2.9 | 3.2 | 64MB | 120W | $625 |
| 7262 | 8 | 3.2 | 3.4 | 128MB | 155W | $575 |
| 7252 | 8 | 3.2 | 3.4 | 64MB | 120W | $475 |
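One rough way to scan this table is price per core. The sketch below uses the core counts and 1KU prices listed above; where the source table left a core count blank, the value from the row above is assumed to carry down. Price per core ignores clock speed, cache, and memory bandwidth per core, so it is a starting point rather than a ranking.

```python
# SKU -> (cores, 1KU price in USD), taken from the dual socket table.
# Core counts for rows the table left blank are assumed to carry down.
DUAL_SOCKET_SKUS = {
    "7742": (64, 6950), "7702": (64, 6450),
    "7642": (48, 4775), "7552": (48, 4025),
    "7542": (32, 3400), "7502": (32, 2600), "7452": (32, 2025),
    "7402": (24, 1783), "7352": (24, 1350),
    "7302": (16, 978), "7282": (16, 650),
    "7272": (12, 625),
    "7262": (8, 575), "7252": (8, 475),
}

price_per_core = {
    sku: price / cores for sku, (cores, price) in DUAL_SOCKET_SKUS.items()
}

# Print SKUs from cheapest to most expensive per core.
for sku, dollars in sorted(price_per_core.items(), key=lambda item: item[1]):
    cores = DUAL_SOCKET_SKUS[sku][0]
    print(f"EPYC {sku} ({cores}c): ${dollars:.2f} per core")

cheapest_sku = min(price_per_core, key=price_per_core.get)
```

On these list prices the per-core cost varies by more than 2X across the stack, which is why the strategies below group SKUs by budget as well as by core count.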

EPYC 7742 or 7702 (64c): Select a High-End SKU, yield up to 2X the performance

Assuming your application scales with core count and maximum performance at a premium cost fits your budget, you can’t beat the top 64-core EPYC 7742 or 7702 SKUs. These will deliver greater throughput on a wide variety of multi-threaded applications.

Anything above EPYC 7452 (32c, 48c): Select a Mid-High Level SKU, reach new performance heights

While these SKUs aren’t inexpensive, they take application performance to new heights and break new benchmark ground. You can take advantage of that performance advantage for your application if it’s multi-threaded. From a price/performance perspective, these SKUs may also be attractive.

EPYC 7452 (32c): Select a Mid Level SKU, improve price performance vs previous generation EPYC

Previous generation AMD EPYC 7xx1 Series CPUs also featured 32 cores. However, the 32 core entrant in the new 7xx2 stack is far less costly than the prior generation while delivering greater memory bandwidth and 2X the FLOPS per core.

EPYC 7452 (32c): Select a Mid Level SKU, match top Xeon Gold and Platinum with far better price/performance

If you’re optimizing for price/performance compared to the top Intel Xeon Platinum 8200 or Xeon Gold 6200 series SKUs, consider this SKU or ones near it. We predict this to be at or near the price/performance sweet-spot for the new platform.

EPYC 7402 (24c): Select a Mid Level SKU, come close to top Xeon Gold and Platinum SKUs

The higher clock speed of this SKU also means it is well suited to some applications.

EPYC 7272-7402 (12, 16, 24c): Select an affordable SKU, yield better performance and price performance

Treat these SKUs as much more affordable alternatives to most Xeon Gold or Silver CPUs. We’ll await further benchmarks to see exactly where the further sweet-spots are compared to these SKUs. They also compare favorably from a price/performance standpoint to 1st Generation EPYC 7xx1 processors with 12, 16, or 24 cores. Same performance, fewer dollars!

Single Socket Performance

As with the previous generation, AMD is heavily promoting the concept of replacing dual socket Intel Xeon servers with single sockets of 2nd Generation AMD EPYC “Rome.” They are producing discounted “P” SKUs with only single socket platform support at reduced prices to help further boost the price-performance advantage of these systems.

Single Socket SKUs

| SKU | Cores | Base Clock (GHz) | Boost Clock (GHz) | L3 Cache | TDP | Price (1KU) |
|---|---|---|---|---|---|---|
| 7702P | 64 | 2.0 | 3.35 | 256MB | 200W | $4425 |
| 7502P | 32 | 2.5 | 3.35 | 128MB | 180W | $2300 |
| 7402P | 24 | 2.8 | 3.35 | 128MB | 180W | $1250 |
| 7302P | 16 | 3.0 | 3.3 | 128MB | 155W | $825 |
| 7232P | 8 | 3.1 | 3.2 | 32MB | 120W | $450 |

Due to the boosted capability of the new CPUs, a single socket configuration may be an increasingly viable alternative to a dual socket Xeon platform for many workloads.

Next Steps: get started today!

Read More

If you’d like to read more speeds and feeds about these new processors, check out our article with detailed specifications of the 2nd Gen AMD EPYC “Rome” CPUs. We summarize and compare the specifications of each model, and provide guidance above and beyond what you’ve seen here.

Try 2nd Gen AMD EPYC CPUs for Yourself

Groups that prefer to verify performance before committing to a design are encouraged to sign up for a Test Drive, which provides access to bare-metal hardware with AMD EPYC CPUs, large memory, and more.

Browse Our Navion AMD EPYC Product Line