The art and science of training neural networks on large data sets to make predictions or classifications has undergone a major transition over the past several years. Driven by growing interest from scientists and engineers, this field of data analysis has come to be called deep learning. Put succinctly, deep learning is the ability of machine learning algorithms to learn feature hierarchies from data and to store those features in the multiple non-linear layers that make up the machine's learning center, its neural network.

Two years ago, the main questions were what deep learning is and how it might be applied to problems in science, engineering, and finance. Over the past year, however, interest has shifted from curiosity about what deep learning is to a focus on acquiring the hardware and software needed to apply deep learning frameworks to specific problems across a wide range of disciplines.

The major deep learning frameworks available today are examined and compared here across several features, such as the framework's native language, multi-GPU support, and aspects of usability.

Fairly recently, Google released a major framework as open source: TensorFlow. IBM is also planning to release its own deep learning software, in cooperation with its OpenPOWER partner NVIDIA. IBM's offering, however, will be not just a software framework but an entire open-source platform, consisting of an OS, the open-source Apache Spark engine for data collection, and SystemML for data analysis. Some components of IBM's deep learning stack, such as SystemML and Apache Spark, have been around for a number of years, while others will be entirely new. Nervana Systems also recently open-sourced its formerly proprietary deep learning software, Neon.

Counting Google's TensorFlow, Nervana Systems' Neon, and the planned IBM deep learning platform, together with Caffe, Torch, and Theano, there are now six major deep learning frameworks. This list is not exhaustive: it omits frameworks that excel at the niche problems they were designed for, such as Kaldi, a speech recognition toolkit. Theano also has a number of spin-off frameworks, including Keras, Blocks, and Lasagne, but here these are grouped under Theano.

| framework | base language | multi-GPU? | pros | cons |
|---|---|---|---|---|
| TensorFlow | Python and C++ | yes | | |
| Torch | Lua | yes | 1) easy to set up 2) helpful error messages 3) large amount of sample code and tutorials | can be somewhat difficult to set up in CentOS |
| Caffe | C++ | yes | general | |
| Theano | Python | by default, no; more than one GPU can be used with a workaround | 1) expressive Python syntax 2) higher-level spin-off frameworks 3) large amount of sample code and tutorials | error messages are cryptic |
| IBM Deep Learning Platform | SystemML, et al. | expected yes | runs out of the box | |
| Neon | Python | yes | runs fast | |

| framework | related software | execution speed* | research areas of applicability |
|---|---|---|---|
| TensorFlow | | slower than Theano and Torch | general |
| Torch | DIGITS | competitive with Theano | general |
| Caffe | DIGITS | slower than Theano and Torch | image classification |
| Theano | | competitive with Torch | general |
| IBM Deep Learning Platform | (is itself a platform) | no common benchmark examined here; Spark has held recent speed records | general |
| Neon | | fastest | general |

*Speeds are based on benchmarks published at convnet-benchmarks on GitHub.


Deep Learning Frameworks

TensorFlow

While new to the open source landscape, Google's TensorFlow deep learning framework has been in development for years as proprietary software. It was originally developed by the Google Brain Team for conducting research in machine learning and deep neural networks. The framework's name reflects its use of data flow graphs, in which nodes represent computations and edges represent the flow of data, in the form of tensors, from one node to another.
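To make the data flow graph idea concrete, here is a minimal pure-Python sketch; it is not TensorFlow's API. Each node names an operation and its input nodes, and tensors (plain Python lists here) flow along the edges when the graph is executed in topological order. The node names, the helpers `matvec`, `add`, and `relu`, and the single-layer network are illustrative assumptions.

```python
from collections import deque

# Hypothetical operations; tensors are represented as plain Python lists.
def matvec(w, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def add(a, b):
    return [ai + bi for ai, bi in zip(a, b)]

def relu(a):
    return [max(0.0, v) for v in a]

# Each entry: node name -> (operation, names of input nodes).
# Source nodes have no operation; their tensors are supplied as feeds.
graph = {
    "x": (None, []),
    "W": (None, []),
    "b": (None, []),
    "Wx": (matvec, ["W", "x"]),
    "Wx_plus_b": (add, ["Wx", "b"]),
    "y": (relu, ["Wx_plus_b"]),
}

def topological_order(graph):
    """Kahn's algorithm: a node becomes ready once all of its inputs are ready."""
    pending = {name: len(inputs) for name, (_, inputs) in graph.items()}
    consumers = {name: [] for name in graph}
    for name, (_, inputs) in graph.items():
        for src in inputs:
            consumers[src].append(name)
    ready = deque(name for name, count in pending.items() if count == 0)
    order = []
    while ready:
        name = ready.popleft()
        order.append(name)
        for dst in consumers[name]:
            pending[dst] -= 1
            if pending[dst] == 0:
                ready.append(dst)
    return order

def execute(graph, feeds):
    """Run every node in dependency order, passing tensors along the edges."""
    values = dict(feeds)
    for name in topological_order(graph):
        op, inputs = graph[name]
        if op is not None:
            values[name] = op(*(values[src] for src in inputs))
    return values

feeds = {"x": [2.0, 1.0], "W": [[0.5, 1.0], [-1.0, 0.25]], "b": [0.1, 0.2]}
print(execute(graph, feeds)["y"])  # [2.1, 0.0]
```

Ordering the nodes topologically guarantees that every input of an operation is available before the operation runs; nodes with no dependency on each other could, in principle, be run in parallel.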

TensorFlow offers a good amount of installation documentation, as well as tutorials aimed at helping beginners understand some of the theoretical aspects of neural networks and get TensorFlow set up and running relatively painlessly.

TensorFlow also supports partial subgraph computation: a subset of the full neural network can be selected and executed, or trained, separately from the rest of the network. This capability supports model parallelism, in which different parts of a network run on different devices, and thus allows for distributed training.
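A rough sketch of partial subgraph computation, again in plain Python rather than TensorFlow's API: when only one output is requested, walking the edges backwards from that output identifies the subgraph it depends on, and only those nodes are executed. The graph, the `traced` wrapper, and the names `required_nodes` and `run_subgraph` are hypothetical.

```python
# Log of operations that actually ran, to show which nodes were skipped.
executed = []

def traced(name, fn):
    """Wrap an operation so that running it is recorded in `executed`."""
    def op(*args):
        executed.append(name)
        return fn(*args)
    return op

# node name -> (operation, names of input nodes); "x" is a fed input.
graph = {
    "x": (None, []),
    "h1": (traced("h1", lambda v: 2.0 * v), ["x"]),
    "h2": (traced("h2", lambda v: v + 3.0), ["x"]),
    "out_a": (traced("out_a", lambda h: h * h), ["h1"]),
    "out_b": (traced("out_b", lambda h: -h), ["h2"]),
}

def required_nodes(graph, fetch):
    """Walk edges backwards from `fetch` to find the subgraph it depends on."""
    needed, stack = set(), [fetch]
    while stack:
        name = stack.pop()
        if name not in needed:
            needed.add(name)
            stack.extend(graph[name][1])
    return needed

def run_subgraph(graph, fetch, feeds):
    """Evaluate only `fetch` and its ancestors; other branches never run."""
    values = dict(feeds)

    def value(name):
        if name not in values:
            op, inputs = graph[name]
            values[name] = op(*(value(src) for src in inputs))
        return values[name]

    return value(fetch)

needed = required_nodes(graph, "out_a")
result = run_subgraph(graph, "out_a", {"x": 1.5})
print(result, sorted(needed), executed)  # 9.0 ['h1', 'out_a', 'x'] ['h1', 'out_a']
```

The `h2` and `out_b` branch is never executed. In a model-parallel setting, such independent subgraphs are the natural units to place on separate devices.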

It is worth noting that Keras, one of the Theano spin-off frameworks, now supports TensorFlow: its author, François Chollet, has recently ported Keras to TensorFlow, so Keras can use either TensorFlow or Theano as its backend. Keras is a particularly easy-to-use deep learning framework, and any model previously written in Keras can now run on top of TensorFlow.

In terms of speed, TensorFlow is currently slower than Theano and Torch, but its performance is being improved, and future releases will likely perform similarly to Theano and Torch.

Caffe

Caffe was developed at the Berkeley Vision and Learning Center (BVLC). It is well suited to image analysis with convolutional neural networks (CNNs) and to region-based analysis within images using Regions with CNN features (R-CNN).
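The core operation in the CNNs that Caffe is used for is a small filter slid across an image. Below is a minimal pure-Python sketch of a "valid" 2-D convolution, written as the cross-correlation that CNN layers usually compute; it is not Caffe's implementation, and the toy image and edge-detecting kernel are assumptions chosen for illustration.

```python
def conv2d_valid(image, kernel):
    """'Valid' 2-D cross-correlation: the kernel never extends past the image border."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            # Weighted sum of the image patch currently under the kernel
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Toy 3x4 image with a dark-to-bright vertical edge in the middle
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Kernel that responds to left-to-right increases in intensity
kernel = [[-1, 1],
          [-1, 1]]

print(conv2d_valid(image, kernel))  # [[0, 2, 0], [0, 2, 0]]
```

The response is non-zero only where the edge sits; a CNN learns many such kernels from data instead of having them written by hand.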

Caffe benefits from a large repository of pre-trained neural network models suited to a variety of image classification tasks, called the Model Zoo, which is available on GitHub.

NVIDIA's open-source DIGITS deep learning software for image classification uses both Caffe and Torch. A previous blog post examines DIGITS 2.0 in depth (see Caffe deep learning Tutorial using NVIDIA DIGITS on Tesla K80 & K40 GPUs); support for the Torch framework has since been added in the recent 3.0.0 release candidate. DIGITS has supported multiple GPUs since version 2.0, and DIGITS 3.0 is expected to be officially released within the next month.

Torch

Torch was originally developed at NYU and is based on the scripting language Lua, which was designed to be portable, fast, extensible, and easy to use in development. Lua's simple syntax carries over to Torch, which is correspondingly easy to write. Torch features a large number of community-contributed packages, giving it a versatile range of support and functionality. It has since been incorporated into the PyTorch project.

Torch can import trained neural network models from Caffe's Model Zoo using LoadCaffe (see Torch LoadCaffe on GitHub).

Like Theano, Torch has its origins in academia and eventually developed a large open source user base. As a result, Torch, like Theano, has a large amount of user support, blogs, and supporting documents across the internet and the academic literature.

Of the frameworks discussed here, Torch is probably the easiest to get up and running, if you are using an Ubuntu platform. If you are using CentOS, workarounds are possible, but require careful modification of OS packages.

Theano

Theano was reviewed in a previous blog post (see Keras and Theano deep learning frameworks). Here, the major aspects of the framework will be reviewed.

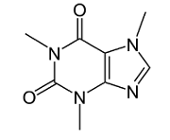

Theano computes the gradients needed for backpropagation symbolically: it derives an analytical expression for each derivative by applying the chain rule to the computation graph, rather than approximating it numerically. An analytical gradient avoids the truncation error that numerical approximations, such as finite differences, accumulate through successive derivative calculations. Training a neural network with backpropagation usually involves some stochastic component (for example, stochastic gradient descent on mini-batches), which helps avoid getting trapped in a local minimum. A noisy or numerically approximated gradient might therefore not be very detrimental early in training, where the stochastic component may be largest, depending on which gradient search method is used. For search methods that require a highly accurate gradient in the later stages of the search for a minimum, however, Theano's analytical gradients would be expected to produce better results.
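To illustrate the difference between an analytical and a numerical gradient, here is a toy symbolic differentiator in plain Python; it is not Theano's API. Expressions are graphs, and `grad` applies the sum, product, and chain rules to build a new expression for the derivative, loosely mirroring how Theano builds a gradient graph (unlike Theano, it does no simplification or compilation). The class names and the test function f(x) = x^2 sin(x) are assumptions for illustration.

```python
import math

class Expr:
    """Node of a tiny symbolic expression graph (illustrative, not Theano)."""
    def __add__(self, other):
        return Add(self, as_expr(other))
    def __radd__(self, other):
        return Add(as_expr(other), self)
    def __mul__(self, other):
        return Mul(self, as_expr(other))
    def __rmul__(self, other):
        return Mul(as_expr(other), self)

def as_expr(value):
    return value if isinstance(value, Expr) else Const(float(value))

class Const(Expr):
    def __init__(self, value):
        self.value = value
    def eval(self, env):
        return self.value
    def grad(self, wrt):
        return Const(0.0)

class Var(Expr):
    def __init__(self, name):
        self.name = name
    def eval(self, env):
        return env[self.name]
    def grad(self, wrt):
        return Const(1.0 if wrt == self.name else 0.0)

class Add(Expr):
    def __init__(self, a, b):
        self.a, self.b = a, b
    def eval(self, env):
        return self.a.eval(env) + self.b.eval(env)
    def grad(self, wrt):
        # Sum rule: (a + b)' = a' + b'
        return self.a.grad(wrt) + self.b.grad(wrt)

class Mul(Expr):
    def __init__(self, a, b):
        self.a, self.b = a, b
    def eval(self, env):
        return self.a.eval(env) * self.b.eval(env)
    def grad(self, wrt):
        # Product rule: (a * b)' = a' * b + a * b'
        return self.a.grad(wrt) * self.b + self.a * self.b.grad(wrt)

class Sin(Expr):
    def __init__(self, a):
        self.a = a
    def eval(self, env):
        return math.sin(self.a.eval(env))
    def grad(self, wrt):
        # Chain rule: sin(a)' = cos(a) * a'
        return Cos(self.a) * self.a.grad(wrt)

class Cos(Expr):
    def __init__(self, a):
        self.a = a
    def eval(self, env):
        return math.cos(self.a.eval(env))
    def grad(self, wrt):
        # Chain rule: cos(a)' = -sin(a) * a'
        return Const(-1.0) * Sin(self.a) * self.a.grad(wrt)

x = Var("x")
f = x * x * Sin(x)   # f(x) = x^2 sin(x), built as an expression graph
df = f.grad("x")     # the derivative is itself a new expression graph

x0, h = 1.3, 1e-4
symbolic = df.eval({"x": x0})
forward_diff = (f.eval({"x": x0 + h}) - f.eval({"x": x0})) / h
reference = 2 * x0 * math.sin(x0) + x0 ** 2 * math.cos(x0)  # derived by hand
print(f"symbolic error:          {abs(symbolic - reference):.1e}")
print(f"finite-difference error: {abs(forward_diff - reference):.1e}")
```

The symbolic result agrees with the hand-derived derivative up to floating-point rounding, while the forward difference carries a truncation error proportional to the step size h.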

Theano's user base benefits from an extensive body of tutorials and documentation covering most of what is currently being done in artificial neural network research.

Theano and Torch have been competitive in execution speed; benchmarks are published on GitHub at convnet-benchmarks. Computing convolutions with FFTs first became available in Theano. This can give a performance boost over computing convolutions directly, because the convolution theorem turns a convolution into an element-wise product in the frequency domain.
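A minimal sketch of the convolution theorem in plain Python: zero-pad both inputs, transform them with a radix-2 FFT, multiply element-wise, and transform back, then check the result against the direct sum. This is a textbook Cooley-Tukey FFT written for illustration, not the Theano or Facebook implementation, which work on batched 2-D data on GPUs. The sample values are arbitrary; note also that CNN layers usually compute cross-correlation, which equals convolution with a flipped kernel.

```python
import cmath

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def ifft(x):
    """Inverse FFT via conjugation: ifft(x) = conj(fft(conj(x))) / n."""
    n = len(x)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in x])]

def conv_direct(a, b):
    """Full linear convolution by the definition: O(len(a) * len(b))."""
    out = [0.0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            out[i + j] += ai * bj
    return out

def conv_fft(a, b):
    """Full linear convolution via FFT, zero-padded to avoid circular wrap-around."""
    m = len(a) + len(b) - 1
    n = 1
    while n < m:
        n *= 2
    fa = fft([complex(v) for v in a] + [0j] * (n - len(a)))
    fb = fft([complex(v) for v in b] + [0j] * (n - len(b)))
    product = [p * q for p, q in zip(fa, fb)]  # convolution becomes a product
    return [v.real for v in ifft(product)[:m]]

samples = [1.0, 2.0, 3.0, 4.0, 5.0]
kernel = [0.5, -1.0, 0.25]
print(conv_direct(samples, kernel))  # [0.5, 0.0, -0.25, -0.5, -0.75, -4.0, 1.25]
print([round(v, 10) for v in conv_fft(samples, kernel)])
```

The direct method needs one multiply-add per pair of input and kernel elements, while the FFT route costs on the order of M log M for the padded length M, which is why FFT-based convolution pays off mainly for larger kernels.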

Facebook, where Torch is used widely by a deep learning research team led by Yann LeCun, released a Torch library for calculating convolutions using FFTs in early 2015.

Theano does not use multiple GPUs by default; configuring it to use more than one GPU requires a fairly simple workaround, which is documented on GitHub.

IBM Deep Learning Platform

IBM's platform is the only deep learning platform or framework examined here that does not consist of a single framework. Instead, it is a whole platform, from OS to programming frameworks, including Spark and SystemML (which IBM recently made open source through the Apache Incubator). IBM is expected to develop the platform further with its OpenPOWER partner, NVIDIA.

Apache Spark, a prime component of IBM’s deep learning platform, is designed for cluster computing and contains MLlib, a distributed machine learning framework. MLlib works with the distributed memory architecture of Spark. MLlib contains many common machine learning algorithms and statistical tools.

SystemML, another prime component of IBM’s platform, features a declarative, high level, R-like syntax, with built-in linear algebra primitives, statistical functions, and constructs specific to machine learning. SystemML scripts can execute within a single SMP enclosure, or they can run across multiple nodes in a distributed computation with Hadoop’s MapReduce, or Apache Spark.
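The data-parallel pattern behind running such scripts on MapReduce or Spark can be sketched in plain Python, without either system's API: each partition, standing in for a cluster node, reduces its local data to a small summary, and the summaries are then merged. Because merging is associative, partitions can be combined in any order. The round-robin partitioning and the choice of computing a mean and variance are illustrative assumptions.

```python
from functools import reduce

def partition(data, num_partitions):
    """Split data round-robin into partitions, as a stand-in for cluster nodes."""
    return [data[i::num_partitions] for i in range(num_partitions)]

def map_partition(part):
    # Each worker summarizes only its local data: (count, sum, sum of squares)
    return (len(part), sum(part), sum(v * v for v in part))

def combine(a, b):
    # Partial summaries are associative, so they can be merged in any order
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
n, s, ss = reduce(combine, map(map_partition, partition(data, 3)))
mean = s / n
variance = ss / n - mean ** 2   # population variance
print(mean, variance)  # 5.0 4.0
```

The sum-of-squares formula keeps the sketch short but can lose precision on large data; numerically stabler merge formulas are generally preferred in practice.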

Spark and SystemML work together: Spark collects data, for example via Spark Streaming, and puts it into a usable representation, while SystemML determines the best algorithms for analyzing the data given the cluster's layout and configuration. One of the platform's distinguishing features is that it automatically optimizes data analysis according to the characteristics of the data and the cluster, for efficiency and scalability. The other deep learning frameworks discussed here do not make such optimization decisions for the user; in effect, IBM's platform adds an optimization layer, hidden from the user, that selects the best method for analysis.
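SystemML's own optimizer is considerably more sophisticated; the following is only a toy, hypothetical illustration of the kind of decision described above: estimate the in-memory size of a dense matrix and choose between single-node and distributed execution. The `ClusterInfo` fields, the 70% memory headroom, and the plan names are assumptions for illustration, not SystemML parameters.

```python
from dataclasses import dataclass

@dataclass
class ClusterInfo:
    node_memory_gb: float  # hypothetical: memory usable on a single node
    num_workers: int       # hypothetical: number of worker nodes

def choose_plan(rows, cols, cluster, bytes_per_value=8, headroom=0.7):
    """Hypothetical rule: run locally if the data fits in memory, else distribute."""
    size_gb = rows * cols * bytes_per_value / 1e9  # dense double-precision estimate
    if size_gb <= headroom * cluster.node_memory_gb:
        return "single-node", size_gb
    if cluster.num_workers > 1:
        return "distributed", size_gb
    return "single-node (out-of-core)", size_gb

cluster = ClusterInfo(node_memory_gb=16.0, num_workers=8)
print(choose_plan(1_000_000, 100, cluster))    # small matrix: stays on one node
print(choose_plan(100_000_000, 1_000, cluster))  # large matrix: spread across workers
```

The point of such a rule is that the same analysis script can run unchanged on a laptop or a cluster, with the execution strategy chosen from the data size and cluster configuration rather than by the user.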

SystemML was recently accepted as an Apache Incubator project, accelerating development of the open source framework. Spark, too, was donated to Apache earlier, by the AMPLab (Algorithms, Machines, and People Lab) at the University of California, Berkeley.

Neon

Adding to the growing trend of proprietary machine learning software being turned into open source projects, Nervana Systems, now an Intel subsidiary, has open sourced Neon, its deep learning framework, which has recently been ranked as the fastest framework across several performance categories (see benchmarks).

Written in Python, Neon has a syntax similar to that of Theano's high-level frameworks (e.g., Keras). With Google and now IBM releasing their deep learning frameworks as open source, Nervana Systems' decision makes sense: open a proprietary framework to keep it relevant, or risk it fading into obscurity.

Neon features a Machine Learning Operations (MOP) layer that enables other deep learning frameworks to work with Neon. Nervana's primary goal is to build a hardware platform designed especially for deep learning, on which it could then offer cloud-based deep learning applications.

More to Come

Beyond the deep learning frameworks discussed here, more are likely to appear as open source in the near future. A number of startups and established companies have been developing their own deep learning frameworks and applications, including Microsoft (Distributed Machine Learning Toolkit), Intel, Netflix, Pandora, Spotify, and Snapchat. Lesser-known startups include Teradeep, Enlitic, Ersatz Labs, Clarifai, MetaMind, and Skymind. New frameworks will likely offer unique features.
