
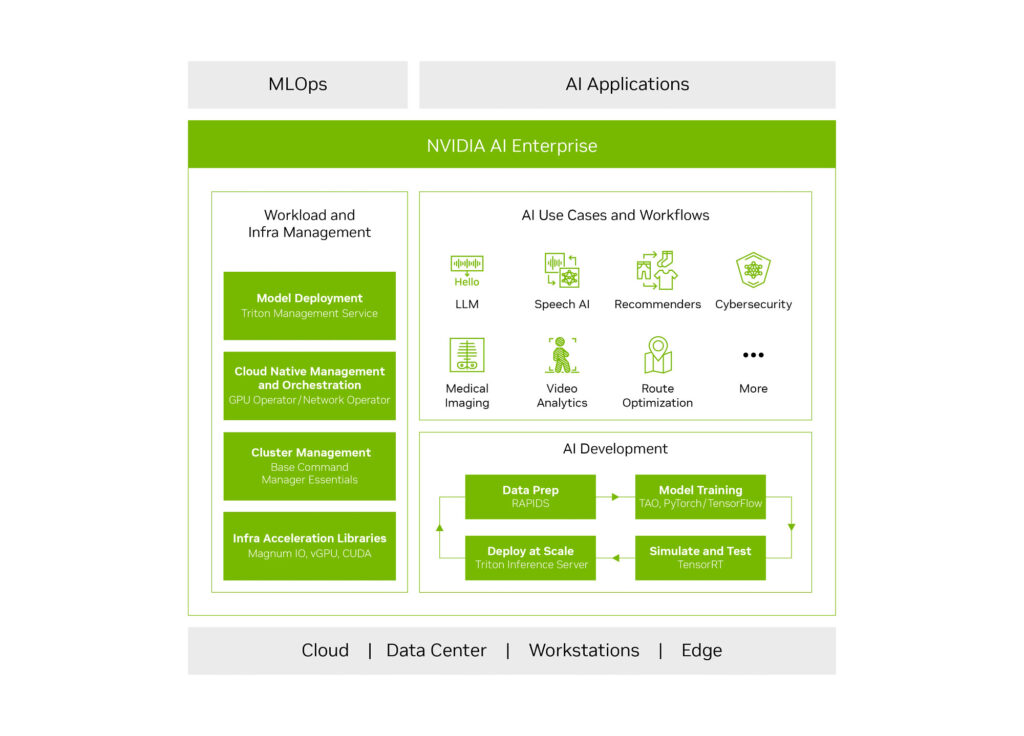

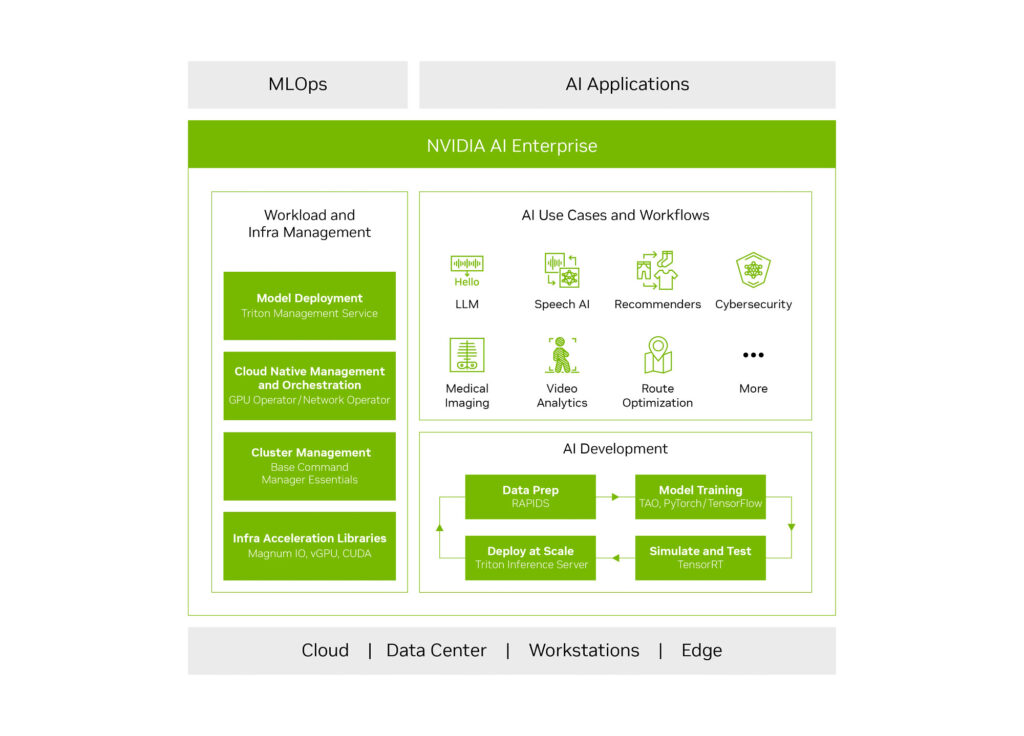

NVIDIA AI Enterprise Software Platform

NVIDIA AI Enterprise is a secure, end-to-end, cloud-native software platform that accelerates the data science pipeline and streamlines development and deployment of production-grade AI applications, including generative AI, computer vision, speech AI, and more. By deploying AI Enterprise on an NVIDIA-Certified System delivered by Microway, you can harness the power of AI, even if you don’t have AI expertise today.

Why NVIDIA AI Enterprise?

Accelerating Enterprise AI While Lowering TCO

NVIDIA AI software optimizes the performance of accelerated computing for every step of the AI workflow. The improvements in performance result in better utilization of computing resources, so fewer servers are needed to support the same AI workload.

Enterprise-Grade

As AI rapidly evolves and expands, the complexity of the software stack and its dependencies grows. NVIDIA AI Enterprise addresses the complexities organizations face when building and maintaining a high-performance, secure, cloud-native AI software platform.

Deploy Everywhere

The NVIDIA AI Enterprise software is containerized, giving enterprises a portable, consistent environment wherever they choose to deploy AI. It is available across public clouds, OEM systems, workstations, data centers, and the NVIDIA DGX platform.

Speeds the Time to Production of Generative AI Applications

NVIDIA AI workflows built into NVIDIA AI Enterprise can accelerate the path to delivering AI outcomes, reduce time to deployment, lower costs, and improve accuracy and performance.

ON PREM SYSTEM OPTION

NVIDIA Certified Servers from Microway for AI Enterprise

NVIDIA-Certified Systems™ are validated for peak performance on NVIDIA AI applications platform-by-platform. Most of Microway’s offerings are Certified.

eBook: The Rise of Enterprise AI: a Guide for the IT Leader

Learn about the barriers to AI adoption, building your IT strategy for AI, implementing AI at enterprise scale, and how to fast track your AI journey.

FREE TRIAL

NVIDIA AI Enterprise

NVIDIA AI Enterprise is available with a free 90-day evaluation license for users with existing systems. Alternatively, complete a hands-on lab in NVIDIA LaunchPad to get familiar with the toolchain.

- NVIDIA AI Enterprise is containerized software that requires a container-ready workload orchestration platform (such as VMware Tanzu or Red Hat OpenShift, among others)

- Trial License requires supported hardware and software instances

- Microway experts can assist in architecting an NVIDIA-Certified Systems™ deployment upon request