AI Inference

Microway Solutions for AI Inference

Deploy exceptional infrastructure for AI Inference. Microway offers inference solutions spanning from single systems (edge or datacenter) up to multi-rack clusters.

Custom Microway

Model Refinement

- WhisperStation with NVIDIA RTX PRO™ GPUs

- 1-4 NVIDIA RTX PRO Blackwell GPUs

- Expertly integrated at the factory by Microway; arrives ready to run real workloads

- Optional NVIDIA AI Enterprise software integration

- Outstanding for RAG workloads

Edge Inference

- NumberSmasher or Navion 1-2U GPU servers

- NVIDIA L4 or RTX PRO GPUs

- Enabled with NVIDIA NIM™ microservices, NVIDIA Dynamo or Triton, NVIDIA NeMo™, and NVIDIA AI Enterprise (optional)

Datacenter Scale-Up Inference

- Higher performance to support large user counts or larger token sizes

- NumberSmasher or Navion 2U-10U GPU servers based upon NVIDIA-Certified Servers

- NVIDIA L4, L40S, H200 NVL, or B200 GPUs

- Enabled with NVIDIA NIM microservices, NVIDIA Dynamo or Triton, NVIDIA NeMo, and NVIDIA AI Enterprise (optional)

- Expertly integrated at the factory by Microway; arrives ready to run real workloads

Scale-Out Inference

TensorSmasher: single/dual rack AI cluster

- Massive user counts, serving multi-modal models, or immense token size

- 4 or more Microway Octoputers with NVIDIA HGX H200 or NVIDIA HGX B200

- Enabled with NVIDIA NIM microservices, NVIDIA Dynamo, and NVIDIA AI Enterprise

- Fully configurable cluster (servers, network, storage, fabric, software) that scales up from models with hundreds of billions of parameters

- Delivered fully integrated by Microway with optional NVIDIA AI Enterprise and NVIDIA Base Command AI workflow management

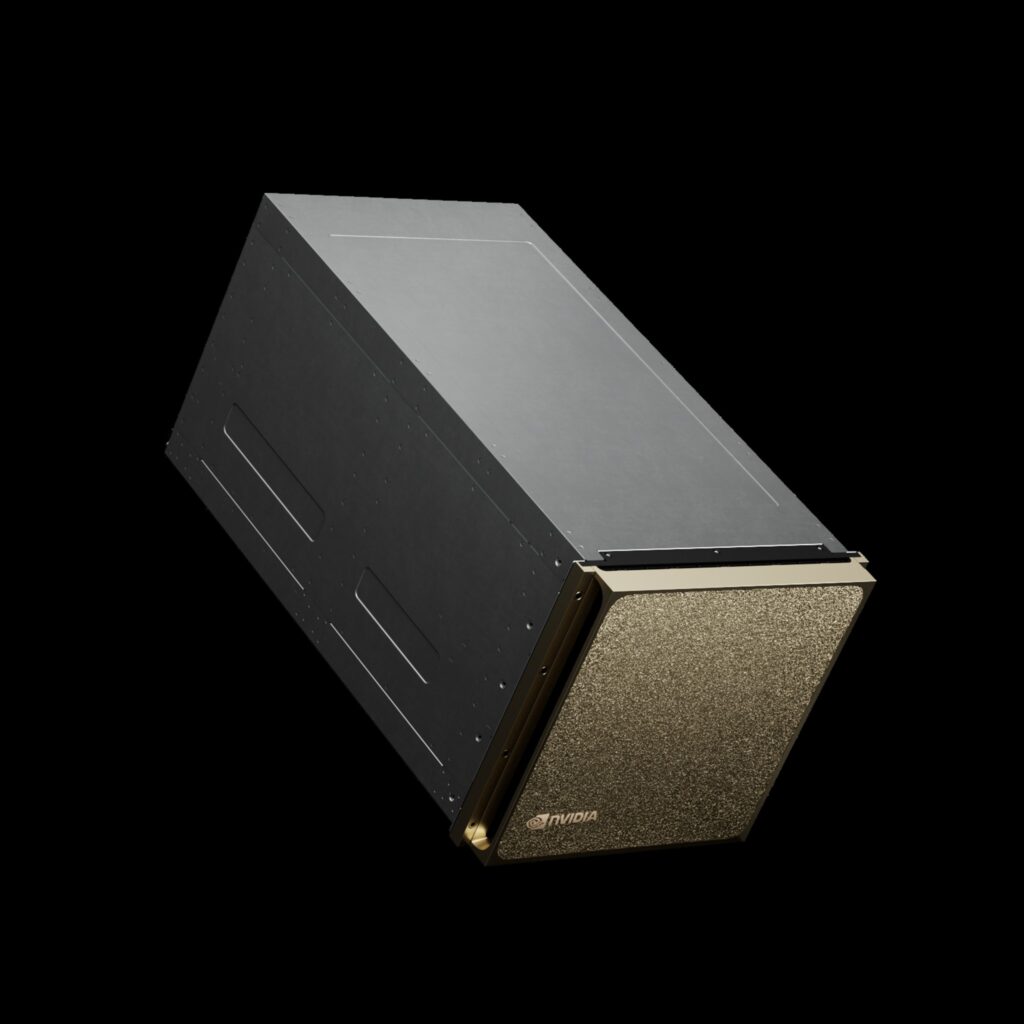

Powered by the NVIDIA DGX platform

First Scaled AI Inference Deployment

- NVIDIA DGX B200: The Foundation for Your AI Factory

- 8 NVIDIA B200 Tensor Core GPUs

- Delivered by Microway with NVIDIA DGX Software Bundle, which includes NVIDIA Base Command and NVIDIA AI Enterprise

- Configured to deploy NVIDIA NIM™ microservices, NVIDIA Dynamo or Triton, and NVIDIA NeMo™

- Comes with onsite installation and runs jobs the day we leave

Production AI Inference

- NVIDIA DGX BasePOD™: rack-scale AI with multiple DGX systems and parallel storage

- 32 NVIDIA H200 or B200 GPUs

- Fixed NVIDIA configuration/reference architecture

- Delivered by Microway with NVIDIA DGX Software Bundle, which includes NVIDIA Base Command and NVIDIA AI Enterprise

- Runs NVIDIA NIM microservices, NVIDIA Dynamo and NVIDIA NeMo

- Comes with onsite installation and runs jobs the day we leave

AI Center of Excellence

- NVIDIA DGX SuperPOD™: The World’s First Turnkey AI Data Center

- 256+ NVIDIA H200 GPUs or 256+ NVIDIA B200 GPUs

- Fixed NVIDIA configurations (multiples of 32-node Scalable Units)

- Delivered by Microway with NVIDIA Base Command or NVIDIA Mission Control and NVIDIA AI Enterprise

- Runs NVIDIA NIM microservices, NVIDIA Dynamo and NVIDIA NeMo

- Custom-tailored onsite installation and white-glove bring-up program
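
The GPU counts quoted for the DGX tiers follow from simple arithmetic, which the rough sketch below illustrates. It assumes 8 GPUs per DGX system (stated above for DGX B200 and assumed here for H200-based systems as well) and 32 nodes per Scalable Unit. The function names are hypothetical and are not part of any NVIDIA or Microway tool.

```python
# Illustrative sizing arithmetic only; not an official configurator.
GPUS_PER_DGX = 8              # stated for DGX B200; assumed for H200 systems too
NODES_PER_SCALABLE_UNIT = 32  # from "multiples of 32-node Scalable Units"


def gpus_for_systems(dgx_systems: int, gpus_per_system: int = GPUS_PER_DGX) -> int:
    """Total GPUs across a given number of DGX systems."""
    if dgx_systems < 1:
        raise ValueError("need at least one DGX system")
    return dgx_systems * gpus_per_system


def superpod_gpus(scalable_units: int) -> int:
    """Total GPUs in a deployment built from whole Scalable Units."""
    return gpus_for_systems(scalable_units * NODES_PER_SCALABLE_UNIT)


def scalable_units_needed(target_gpus: int) -> int:
    """Smallest number of whole Scalable Units that reaches target_gpus."""
    gpus_per_unit = NODES_PER_SCALABLE_UNIT * GPUS_PER_DGX
    return -(-target_gpus // gpus_per_unit)  # ceiling division


if __name__ == "__main__":
    print(gpus_for_systems(1))         # a single DGX system
    print(gpus_for_systems(4))         # four systems, matching the 32-GPU tier
    print(superpod_gpus(1))            # one Scalable Unit
    print(scalable_units_needed(300))  # units required for a 300-GPU target
```

Under these assumptions, four DGX systems account for the 32-GPU tier and a single Scalable Unit accounts for the 256-GPU starting point. Actual configurations are fixed by the NVIDIA reference architectures.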

Why Select Microway for AI Inference Deployments?

Runs Code the Day it Leaves Microway

Solutions integrated by Microway run applications immediately after delivery. That’s how we test them: with real jobs.

Unmatched Burn-In Testing

We burn-in test with applications that stress every GPU memory block.

NVIDIA AI Software Integration

Microway experts can install and integrate NVIDIA AI Enterprise, any NGC container, NVIDIA Dynamo, NVIDIA NeMo™, and NVIDIA NIM™ microservices.

Architected by Experts and Backed by Microway Technical Support

Our sales engineers have extensive expertise in architecting AI solutions, and our technical support team has hands-on experience integrating GPU clusters. Never deal with a Tier 1 OEM “generalist” again.