There is a lot of material available on RAID, describing the technologies, the options, and the pitfalls. However, there isn’t a great deal on RAID from an HPC perspective. We’d like to provide an introduction to RAID, clear up a few misconceptions, share some best practices, and explain what sort of configurations we recommend for different use cases.

What is RAID?

Originally known as Redundant Array of Inexpensive Disks, the acronym is now more commonly considered to stand for Redundant Array of Independent Disks. The main benefits of RAID are improved disk read/write performance, increased redundancy, and the ability to build logical volumes larger than any single disk.

RAID achieves these benefits primarily through striping, mirroring, and parity. Striping breaks data into segments that are placed on different drives; because those drives work in parallel, performance improves. Mirroring duplicates data on the fly across drives. Parity, in the context of RAID, is redundancy information computed from the data (for single parity, a bitwise XOR of the data blocks in each stripe) and distributed across the drives, so that when one or more drives fail (how many depends on the RAID level), the lost data can be reconstructed from the remaining drives.
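To make single parity concrete, here is a minimal Python sketch assuming the XOR scheme described above. The stripe contents and the `xor_blocks` helper are purely illustrative: XOR-ing all data blocks yields the parity block, and XOR-ing the surviving blocks with the parity regenerates any one missing block.

```python
from functools import reduce


def xor_blocks(blocks):
    """Bitwise XOR of equal-length byte blocks, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Hypothetical stripe: three data blocks on three drives.
data = [b"HPC!", b"RAID", b"demo"]
parity = xor_blocks(data)  # stored on a fourth drive

# Simulate losing drive 1, then rebuild its block from the survivors plus parity.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)
```

Because XOR is its own inverse, the same function both computes parity and performs the rebuild.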

RAID comes in a variety of flavors, with the most common being the following.

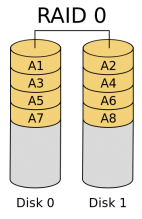

RAID 0 (striping)

Redundancy: none

Number of drives providing space: all drives

The riskiest RAID setup, RAID 0 stripes blocks of data across drives and provides no redundancy. If one drive fails, all data is lost. The benefits of RAID 0 are that you get increased drive performance and no storage space taken up by data parity. Due to the real risk of total data loss, though, we normally do not recommend RAID 0 except for certain use cases. A fast, temporary scratch volume is one of these exceptions.
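The round-robin placement that striping relies on can be sketched as follows. This is a simplified model, not any particular controller’s on-disk format; `locate_block` and its `blocks_per_strip` parameter (the strip or chunk size, which real controllers let you configure) are hypothetical names.

```python
def locate_block(logical_block: int, num_drives: int, blocks_per_strip: int = 1) -> tuple[int, int]:
    """Map a logical block to (drive, offset on that drive) in a round-robin RAID 0 stripe."""
    strip = logical_block // blocks_per_strip                # which strip the block falls in
    drive = strip % num_drives                               # strips are dealt to drives in turn
    row = logical_block // (blocks_per_strip * num_drives)   # which full stripe across all drives
    offset = row * blocks_per_strip + logical_block % blocks_per_strip
    return drive, offset


# Six consecutive blocks on a hypothetical 4-drive array spread over all four
# drives, so a large sequential read keeps every drive busy at once.
for lb in range(6):
    print(lb, locate_block(lb, 4))
```

Since any file spanning more than a few strips has pieces on every drive, losing one drive leaves holes in nearly all of the data, which is why a RAID 0 failure is total.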

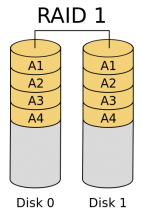

RAID 1 (mirroring)

Redundancy: n-1 drives (1 drive in a typical two-drive mirror)

Number of drives providing space: 1 (half the drives in a typical two-drive mirror)

RAID 1 is in many ways the opposite of RAID 0. Data is mirrored on every disk in the array, so even if one or more drives fail, the data remains intact as long as at least one member of the mirror is still functioning. The main downside is capacity: in the typical two-drive mirror, only half of the raw capacity is usable. Reads can be faster, since any member of the mirror can serve them, but writes are not, since every write must go to every drive. We usually recommend that operating systems be housed on two drives in RAID 1.
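A toy model helps show why RAID 1 speeds up reads but not writes. The `Mirror` class below is a hypothetical in-memory illustration that assumes a simple round-robin read policy; real implementations may instead send each read to the least busy drive.

```python
class Mirror:
    """Toy RAID 1 set: writes go to every healthy member; reads rotate among them."""

    def __init__(self, members: int = 2) -> None:
        self.disks: list[dict[int, bytes]] = [{} for _ in range(members)]
        self.healthy = [True] * members
        self._next = 0  # round-robin pointer used to spread reads

    def write(self, block: int, data: bytes) -> None:
        # Every member must store the data, so writes are no faster than on one drive.
        for disk, ok in zip(self.disks, self.healthy):
            if ok:
                disk[block] = data

    def read(self, block: int) -> bytes:
        # Any healthy member can serve a read, so reads can be spread across drives.
        candidates = [i for i, ok in enumerate(self.healthy) if ok]
        if not candidates:
            raise OSError("every mirror member has failed")
        i = candidates[self._next % len(candidates)]
        self._next += 1
        return self.disks[i][block]

    def fail(self, member: int) -> None:
        self.healthy[member] = False


m = Mirror()
m.write(0, b"boot")
m.fail(0)         # lose one half of the mirror
print(m.read(0))  # still readable from the surviving member
```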

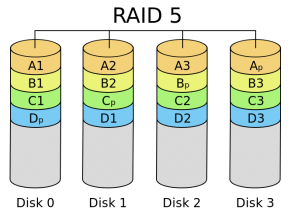

RAID 5 (single parity)

Redundancy: 1 drive

Number of drives providing space: n-1

Next we have the very common but widely misunderstood RAID 5. Here, data blocks are striped across disks as in RAID 0, and so are parity blocks, which rotate among the drives rather than living on a dedicated parity drive. These parity blocks allow the array to keep functioning after a single drive failure, at the cost of one drive’s worth of space. Because it stripes data like RAID 0, RAID 5 enjoys increased read performance; writes benefit from striping too, although every write must also update parity, which makes small random writes comparatively expensive.
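Where RAID 5’s parity lives varies by implementation. The sketch below assumes the left-symmetric layout, the default in Linux software RAID (md); hardware controllers may rotate parity differently, and `raid5_stripe_layout` is a hypothetical helper. Rotating parity this way keeps any single drive from becoming a parity bottleneck.

```python
def raid5_stripe_layout(stripe: int, num_drives: int) -> list[str]:
    """Block labels per drive for one stripe, assuming a left-symmetric layout."""
    parity_drive = (num_drives - 1) - (stripe % num_drives)  # parity moves one drive left each stripe
    layout = [""] * num_drives
    layout[parity_drive] = "P"
    # Data blocks start on the drive after the parity drive and wrap around.
    for k in range(num_drives - 1):
        drive = (parity_drive + 1 + k) % num_drives
        layout[drive] = f"D{stripe * (num_drives - 1) + k}"
    return layout


# Four stripes on a hypothetical 4-drive array: each drive holds parity exactly once.
for s in range(4):
    print(raid5_stripe_layout(s, 4))
```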

The misconception about RAID 5 is that single-drive parity is a robust safeguard against data loss. Once a drive has failed, the array has no redundancy left while it waits for, or undergoes, a rebuild. The heavy I/O of a rebuild is exactly the kind of stress likely to trigger a second drive failure, and at that point nothing protects the data.
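To put rough numbers on the rebuild risk, a common simplification treats drive failures as independent and exponentially distributed. Under that assumption, the chance that at least one of k surviving drives fails during a rebuild lasting T hours, given a per-drive mean time to failure M, is 1 - exp(-kT/M). The function and parameter values below are hypothetical, and the model ignores the very effects described above (heavier I/O during the rebuild, drives from the same batch wearing out together) as well as unrecoverable read errors, so real-world risk is likely higher than it reports.

```python
import math


def p_failure_during_rebuild(surviving_drives: int, rebuild_hours: float, mttf_hours: float) -> float:
    """P(at least one surviving drive fails during the rebuild window),
    assuming independent, exponentially distributed drive lifetimes."""
    combined_rate = surviving_drives / mttf_hours  # expected failures per hour across the survivors
    return 1.0 - math.exp(-combined_rate * rebuild_hours)


# Hypothetical inputs for illustration only: a 12-drive RAID 5 has lost one drive,
# the rebuild takes 24 hours, and each drive's MTTF is 1,000,000 hours.
p = p_failure_during_rebuild(surviving_drives=11, rebuild_hours=24, mttf_hours=1_000_000)
print(f"{p:.4%}")
```

Even this optimistic estimate grows with the number of surviving drives and with rebuild time, and higher-capacity drives take longer to rebuild.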

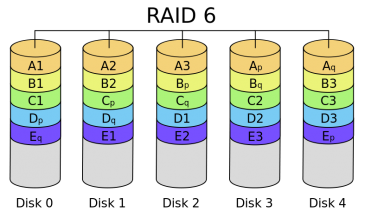

RAID 6 (double parity)

Redundancy: 2 drives

Number of drives providing space: n-2

RAID 6 builds upon RAID 5 with a second parity block, tolerating two drive failures instead of one. Generally we find that losing a second disk’s worth of capacity is a fair tradeoff for the increased redundancy and we often recommend RAID 6 for larger arrays still requiring strong performance.
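RAID 6 implementations can compute the second parity block in different ways. The sketch below assumes the P+Q scheme used by Linux md: P is the plain XOR of the data blocks, and Q weights each block by a power of the generator g = 2 in the finite field GF(2^8) before XOR-ing. Having both lets any two lost data blocks be solved for. The function names and stripe contents are illustrative; hardware controllers may use different codes.

```python
from __future__ import annotations


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x^2 + 1 (0x11D)."""
    result = 0
    while b:
        if b & 1:
            result ^= a  # addition in GF(2^8) is XOR
        a <<= 1
        if a & 0x100:
            a ^= 0x11D  # reduce modulo the field polynomial
        b >>= 1
    return result


def gf_pow(a: int, n: int) -> int:
    result = 1
    for _ in range(n):
        result = gf_mul(result, a)
    return result


def gf_inv(a: int) -> int:
    # For nonzero a, a^255 == 1 in GF(2^8), so a^254 is its inverse.
    return gf_pow(a, 254)


def pq_parity(data: list[bytes]) -> tuple[bytes, bytes]:
    """P = XOR of all data blocks; Q = XOR of g^i * D_i with generator g = 2."""
    p = bytearray(len(data[0]))
    q = bytearray(len(data[0]))
    for i, block in enumerate(data):
        coeff = gf_pow(2, i)
        for j, byte in enumerate(block):
            p[j] ^= byte
            q[j] ^= gf_mul(coeff, byte)
    return bytes(p), bytes(q)


def recover_two(data: list[bytes | None], p: bytes, q: bytes, x: int, y: int) -> tuple[bytes, bytes]:
    """Rebuild lost data blocks x and y (x != y) from the survivors plus P and Q."""
    size = len(p)
    # Recompute parity with the lost blocks treated as zero.
    zeroed = [bytes(size) if i in (x, y) else blk for i, blk in enumerate(data)]
    pxy, qxy = pq_parity(zeroed)
    gx, gy = gf_pow(2, x), gf_pow(2, y)
    inv = gf_inv(gx ^ gy)
    dx, dy = bytearray(size), bytearray(size)
    for j in range(size):
        a = p[j] ^ pxy[j]  # equals Dx ^ Dy
        b = q[j] ^ qxy[j]  # equals g^x*Dx ^ g^y*Dy
        dx[j] = gf_mul(b ^ gf_mul(gy, a), inv)
        dy[j] = a ^ dx[j]
    return bytes(dx), bytes(dy)


stripe = [b"node", b"data", b"jobs", b"logs"]  # four hypothetical data drives
p, q = pq_parity(stripe)
damaged = [stripe[0], None, stripe[2], None]   # drives 1 and 3 have failed
print(recover_two(damaged, p, q, 1, 3))
```

The recovery step solves two equations in two unknowns: P gives Dx ^ Dy, and Q gives g^x·Dx ^ g^y·Dy, which is why a second, differently weighted parity block is needed to survive a second failure.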

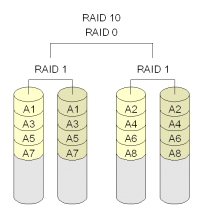

RAID 10 (striped mirroring)

Redundancy: 1 drive per volume in the span

Number of drives providing space: n/2

As you can see from the diagram, RAID 10 combines RAID 1 mirroring with RAID 0 striping. It is, in essence, a stripe of mirrors, so a RAID 10 can be built from any even number of drives greater than 2 (e.g. 4, 6, 8). RAID 10 offers a very reasonable balance of performance and redundancy, with the primary concern for some users being the reduced storage space: since each volume in the RAID 0 span is a RAID 1 mirror, a full half of the drives are used for redundancy. And while the risk of data loss is greatly reduced, it is not eliminated. If every drive in one mirror volume fails before it can be rebuilt, data is lost. In practice, though, this is uncommon, and RAID 10 is normally considered an extremely robust form of RAID.
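The claim that RAID 10 survives most, but not all, two-drive failures can be checked by enumeration. This sketch assumes two-way mirrors with drives 2k and 2k+1 paired, and that every pair of failed drives is equally likely; the drive numbering and function names are hypothetical.

```python
from itertools import combinations


def raid10_survives(failed: set[int], num_drives: int) -> bool:
    """Drives 2k and 2k+1 form mirror k; data is lost only if a whole mirror fails."""
    for k in range(num_drives // 2):
        if {2 * k, 2 * k + 1} <= failed:
            return False
    return True


def fatal_double_failure_fraction(num_drives: int) -> float:
    """Fraction of all possible two-drive failures that destroy the array."""
    pairs = list(combinations(range(num_drives), 2))
    fatal = sum(not raid10_survives(set(pair), num_drives) for pair in pairs)
    return fatal / len(pairs)


for n in (4, 8, 24):
    print(n, round(fatal_double_failure_fraction(n), 3))
```

For 8 drives, 4 of the 28 possible two-drive failures hit the same mirror, so the fatal fraction is 1/7; in general it works out to 1/(n-1), shrinking as the array grows.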

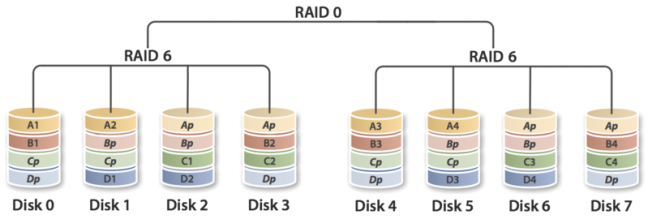

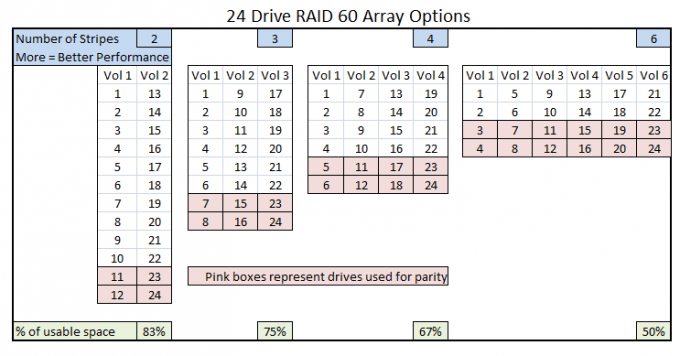

RAID 60 (striped double parity)

Redundancy: 2 drives per volume in the span

Number of drives providing space: n - 2 × (number of RAID 6 volumes in the span)

RAID 60 is less common among consumers but sees a lot of use in enterprise and HPC environments. Similar in concept to RAID 10, RAID 60 is a group of RAID 6 volumes striped into a single RAID 0 span. In a typical RAID 10, every additional pair of drives simply adds another mirror to the span; with RAID 60, administrators choose how many RAID 6 volumes make up the span. For example, 24 drives can be arranged in four different RAID 60 configurations.

A configuration is valid when it meets three criteria: 1) it has at least two volumes (a single volume is simply RAID 6), 2) the number of drives is evenly divisible by the number of volumes, and 3) each volume has at least four drives, the minimum for a RAID 6 volume. As the chart indicates, adding volumes improves redundancy and performance but gives up more capacity to parity, leaving less usable space. Each use case is unique, but the rule of thumb for most users is 8 to 12 drives per volume. Note that the six-volume example has the same usable capacity as a RAID 10 built from the same 24 drives, so that use case would be better served by RAID 10.
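The validity rules above translate directly into a short enumeration. This sketch assumes identical drives and counts usable space in drives’ worth of capacity; `raid60_configs` is a hypothetical helper.

```python
def raid60_configs(num_drives: int, min_per_volume: int = 4) -> list[tuple[int, int, int]]:
    """List (volumes, drives per volume, usable drives) for a RAID 60 span."""
    configs = []
    # At least two volumes, and no more than allows min_per_volume drives each.
    for volumes in range(2, num_drives // min_per_volume + 1):
        if num_drives % volumes == 0:
            per_volume = num_drives // volumes
            usable = num_drives - 2 * volumes  # two drives' worth of parity per RAID 6 volume
            configs.append((volumes, per_volume, usable))
    return configs


for volumes, per_volume, usable in raid60_configs(24):
    print(f"{volumes} volumes x {per_volume} drives -> {usable} drives usable")
```

For 24 drives it yields 2 volumes of 12 (20 drives usable), 3 of 8 (18), 4 of 6 (16), and 6 of 4 (12), the last matching RAID 10’s 24 / 2 = 12.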

RAID 50 (striped single parity)

Redundancy: 1 drive per volume in the span

Number of drives providing space: n - (number of RAID 5 volumes in the span)

It’s worth mentioning that RAID 50 has a very similar structure to RAID 60, only with striped RAID 5 volumes instead of RAID 6. RAID 50 improves somewhat on RAID 5’s redundancy, since it can survive one drive failure in each volume, but each volume is still left without protection while it rebuilds, so we recommend it less often. Consequently, RAID 60 configurations are far more common among our customers.
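The capacity and redundancy rules from each section can be collected into one helper, which makes the RAID 50 versus RAID 60 tradeoff easy to see. This is a simplified sketch assuming identical drives, n-way mirrors for RAID 1, and two-way mirrors for RAID 10; it ignores controller overhead, and `raid_summary` is a hypothetical name. The best-case figure assumes failures land in different volumes.

```python
def raid_summary(level: str, n: int, volumes: int = 1) -> tuple[int, int, int]:
    """Return (usable drives, failures always survived, failures survived in the best case)."""
    if level == "0":
        return n, 0, 0
    if level == "1":   # n-way mirror: every member holds the same data
        return 1, n - 1, n - 1
    if level == "5":
        return n - 1, 1, 1
    if level == "6":
        return n - 2, 2, 2
    if level == "10":  # two-way mirrors; survives one loss per mirror pair
        return n // 2, 1, n // 2
    if level == "50":  # one drive's worth of parity per RAID 5 volume
        return n - volumes, 1, volumes
    if level == "60":  # two drives' worth of parity per RAID 6 volume
        return n - 2 * volumes, 2, 2 * volumes
    raise ValueError(f"unsupported RAID level: {level}")


for level, volumes in [("50", 2), ("60", 2), ("10", 1)]:
    print(level, raid_summary(level, 24, volumes))
```

With 24 drives in two volumes, RAID 50 keeps 22 drives of space but is only guaranteed to survive one failure, while RAID 60 keeps 20 and is guaranteed to survive two.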

Conclusion

If you prefer a visual review of these concepts, the Intel RAID group has produced a strong video on the topic.

There are other RAID configurations, but those listed above are the most common. If you have other questions about storage or other HPC topics, be sure to contact us below.