NVIDIA Datacenter GPU Solutions from Microway

NVIDIA GPUs are the leading acceleration platform for HPC and AI. They offer overwhelming speedups compared to CPU-only platforms on thousands of applications, simple directive-based programming, and the opportunity for custom code. As an NVIDIA NPN Elite partner, Microway can custom architect a bleeding-edge GPU solution for your application or code.

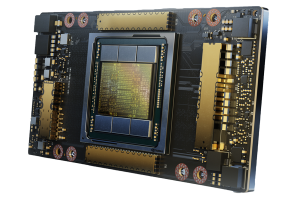

NVIDIA H100 GPUs

The NVIDIA H100 Tensor Core GPU, powered by the NVIDIA Hopper™ architecture, provides the utmost in GPU acceleration for your deployment, along with groundbreaking features:

- A dramatic leap in performance for HPC: Up to 34 TFLOPS FP64 double-precision floating-point performance (67 TFLOPS via FP64 Tensor Cores)
- Unprecedented performance for Deep Learning: Speedups of up to 9X for AI training and up to 30X for inference with Transformers, approaching 2 PFLOPS/4 PFLOPS performance
- 2nd Gen Multi Instance GPU (MIG): Provides up to 7X the secure tenants for more, fully isolated applications
- Fastest GPU memory: 80GB of HBM3 operating at up to 3.35TB/s
- Class-leading connectivity: PCI-E Gen 5 for up to 128GB/sec of transfer BW to DPUs or InfiniBand, and 4th-generation NVLink for up to 900GB/sec of GPU-GPU communication

NVIDIA L40S GPUs

NVIDIA L40S GPUs are outstanding general-purpose GPU accelerators for a variety of AI & Graphics workloads:

- Outstanding Graphics and FP32 performance: Up to 91.6 TFLOPS FP32 single-precision floating-point performance
- Excellent Deep Learning throughput: 362.05 TFLOPS of FP16 Tensor Core (733 TFLOPS with sparsity) AI performance; 733 TOPS Int8 performance (1,466 TOPS with sparsity); 1.2-1.7X the AI training performance of NVIDIA A100
- Large, Fast GPU memory

NVIDIA A100 GPUs

NVIDIA A100 “Ampere” GPUs provide advanced GPU acceleration for your deployment and offer advanced features:

Note: NVIDIA announced an End of Sale Program for NVIDIA A100 with last time forecasts in late Feb 2024. Unless you are augmenting an existing NVIDIA A100 deployment, we recommend transitioning to NVIDIA H100 or NVIDIA L40S GPUs.

- Multi Instance GPU (MIG): Allows each A100 GPU to run seven separate & isolated applications or user sessions
- Strong HPC performance: Up to 9.7 TFLOPS FP64 double-precision floating-point performance (19.5 TFLOPS via FP64 Tensor Cores)
- Excellent Deep Learning throughput: Speedups of 3x~20x for neural network training and 7x~20x for inference (vs Tesla V100), plus new TF32 instructions
- Large, Fast GPU memory: 80GB of high-bandwidth memory operating at up to 2TB/s
- Faster connectivity: 3rd-generation NVLink provides 10x~20x faster transfers than PCI-Express

NVIDIA A30 GPUs

NVIDIA A30 “Ampere” GPUs offer versatile compute acceleration for mainstream enterprise GPU servers:

- Amazing price-performance for HPC compute: Up to 5.2 TFLOPS FP64 double-precision floating-point performance (10.3 TFLOPS via FP64 Tensor Cores)
- Strong AI Training & Inference Performance: Approximately 50% of the FP16 Tensor FLOPS of an NVIDIA A100, plus support for TF32 instructions
- Large and fast GPU memory spaces: 24GB of high-bandwidth memory with 933GB/s of memory bandwidth
- Multi Instance GPU (MIG): Allows each A30 GPU to run four separate & isolated applications or user sessions
- Fast connectivity: PCI-Express Gen 4.0 interface to host and 200GB/sec 3rd Gen NVLink interface to neighboring GPUs
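To compare the peak figures quoted above for the H100, A100, and A30, one option is to compute each GPU's machine balance: peak FP64 FLOPs per byte of memory bandwidth. The sketch below uses only the "up to" numbers from this page (non-Tensor-Core FP64 and memory bandwidth); the function and dictionary names are illustrative, and the L40S is left out because no FP64 or bandwidth figure is given for it here. These are vendor peaks, not measured results.

```python
# Peak figures quoted on this page ("up to" vendor numbers, not measurements).
SPECS = {
    "H100": {"fp64_tflops": 34.0, "mem_bw_tbs": 3.35},
    "A100": {"fp64_tflops": 9.7, "mem_bw_tbs": 2.0},
    "A30": {"fp64_tflops": 5.2, "mem_bw_tbs": 0.933},
}


def machine_balance(fp64_tflops, mem_bw_tbs):
    """Peak FP64 FLOPs available per byte read from GPU memory."""
    return fp64_tflops / mem_bw_tbs


def ratio_to(baseline, key):
    """Ratio of every GPU's value for `key` to the baseline GPU's value."""
    base = SPECS[baseline][key]
    return {name: spec[key] / base for name, spec in SPECS.items()}


if __name__ == "__main__":
    for name, spec in SPECS.items():
        print(f"{name}: {machine_balance(**spec):.2f} FLOP/byte")
    print(ratio_to("A100", "fp64_tflops"))
```

A kernel that performs fewer FLOPs per byte than the machine balance is likely to be limited by memory bandwidth rather than by peak compute, so the bandwidth figure may matter more than the TFLOPS figure for such codes.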

Why NVIDIA Datacenter GPUs?

NVIDIA Datacenter GPUs (formerly Tesla GPUs) have unique capabilities not present in consumer GPUs and are the ideal choice for professionals deploying clusters, servers, or workstations. Features unique to NVIDIA’s datacenter GPUs include:

Full NVLink Capability, Up to 900GB/sec

Only NVIDIA Datacenter GPUs deploy the most robust implementation of NVIDIA NVLink for the highest bandwidth data transfers. At up to 900GB/sec per GPU, your data moves freely throughout the system at nearly 14X the rate of PCI-E 4.0 x16 GPUs.
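The roughly 14X figure can be checked with a short calculation. This sketch assumes the 900GB/sec NVLink number is total bidirectional bandwidth and compares it with the bidirectional bandwidth of a PCI-E x16 link, derived from the per-lane signaling rate (16 GT/s for Gen 4, 32 GT/s for Gen 5) and 128b/130b encoding. The function names are illustrative, and protocol overhead beyond line encoding is ignored.

```python
NVLINK_GBS = 900.0  # per-GPU NVLink bandwidth quoted above (assumed bidirectional)


def pcie_x16_bidirectional_gbs(gigatransfers_per_sec):
    """Theoretical bidirectional bandwidth of a PCI-E x16 link in GB/s.

    Assumes 128b/130b line encoding (used by Gen 3, 4, and 5) and
    ignores packet/protocol overhead.
    """
    lanes = 16
    per_direction = gigatransfers_per_sec * lanes * (128 / 130) / 8  # bits -> bytes
    return 2 * per_direction


def transfer_seconds(gigabytes, link_gbs):
    """Best-case time to move `gigabytes` of data over a link."""
    return gigabytes / link_gbs


if __name__ == "__main__":
    gen4 = pcie_x16_bidirectional_gbs(16.0)
    gen5 = pcie_x16_bidirectional_gbs(32.0)
    print(f"PCI-E Gen 4 x16: {gen4:.1f} GB/s, Gen 5 x16: {gen5:.1f} GB/s")
    print(f"NVLink / PCI-E Gen 4 x16: {NVLINK_GBS / gen4:.1f}x")
    print(f"80 GB over NVLink: {transfer_seconds(80, NVLINK_GBS):.3f} s")
    print(f"80 GB over PCI-E Gen 4 x16: {transfer_seconds(80, gen4):.3f} s")
```

With these assumptions the Gen 4 x16 link comes out near 63GB/sec, which puts the ratio at about 14X, and the Gen 5 result lands close to the 128GB/sec quoted for the H100.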

Unique Instructions for AI Training, AI Inference, & HPC

Datacenter GPUs support the latest TF32, BFLOAT16, FP64 Tensor Core, Int8, and FP8 instructions, which dramatically improve application performance.
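To make the trade-off between these floating-point formats concrete, the sketch below lists the exponent and mantissa widths of each one and derives the machine epsilon (the gap between 1.0 and the next representable value). The bit layouts are the commonly published ones; FP8 is shown in its two usual variants, E4M3 and E5M2. The table and helper names are illustrative, and Int8 is omitted because it is an integer format.

```python
# (exponent bits, explicit mantissa bits); every format also has one sign bit.
FORMATS = {
    "FP64": (11, 52),
    "FP32": (8, 23),
    "TF32": (8, 10),      # FP32 exponent range with FP16-sized mantissa
    "BFLOAT16": (8, 7),
    "FP16": (5, 10),
    "FP8 E4M3": (4, 3),
    "FP8 E5M2": (5, 2),
}


def machine_epsilon(name):
    """Gap between 1.0 and the next larger representable value."""
    _, mantissa_bits = FORMATS[name]
    return 2.0 ** -mantissa_bits


def total_bits(name):
    """Bits of information in the format: sign + exponent + mantissa."""
    exponent_bits, mantissa_bits = FORMATS[name]
    return 1 + exponent_bits + mantissa_bits


if __name__ == "__main__":
    for name in FORMATS:
        print(f"{name:>9}: {total_bits(name):2d} bits, epsilon = {machine_epsilon(name):.3g}")
```

The table shows why TF32 is attractive for training: it keeps the exponent range of FP32 while carrying the same mantissa precision as FP16.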

Unmatched Memory Capacity, up to 80GB per GPU

Support your largest datasets with up to 80GB of GPU memory, far greater capacity than available on consumer offerings.
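As a rough sizing aid, the sketch below estimates whether a model's weights alone would fit in 80GB at different precisions. It is a simplification: it treats 80GB as 80 decimal gigabytes, counts only the weights (no activations, optimizer state, or framework overhead), and uses a hypothetical 30-billion-parameter model purely as an example.

```python
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "FP8": 1}


def weights_gb(num_params, precision):
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_on_one_gpu(num_params, precision, gpu_gb=80.0):
    """True if the weights alone fit in `gpu_gb` of GPU memory."""
    return weights_gb(num_params, precision) <= gpu_gb


if __name__ == "__main__":
    params = 30e9  # hypothetical model size, for illustration only
    for precision in BYTES_PER_PARAM:
        size = weights_gb(params, precision)
        verdict = "fits" if fits_on_one_gpu(params, precision) else "does not fit"
        print(f"{precision}: {size:.0f} GB of weights, {verdict} in 80 GB")
```

Real workloads need headroom beyond the weights, so a result of "fits" here is a necessary condition rather than a guarantee.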

Full GPU Direct Capability

Only datacenter GPUs support the complete array of GPU Direct P2P, RDMA, and Storage features. These critical functions remove unnecessary copies and dramatically improve data flow.

Explosive Memory Bandwidth up to 3TB/s and ECC

NVIDIA Datacenter GPUs uniquely feature HBM2 and HBM3 GPU memory with up to 3TB/sec of bandwidth and full ECC protection.

Superior Monitoring & Management

Datacenter GPUs integrate fully with the host system’s monitoring and management capabilities, such as IPMI. Administrators can manage datacenter GPUs with their widely used cluster/grid management tools.
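As one illustration of scripted monitoring, the sketch below polls GPU state through NVIDIA's `nvidia-smi` command-line tool and parses its CSV output. It assumes `nvidia-smi` is installed and on the PATH. The `SAMPLE` text is made-up output used only to demonstrate the parser, and the function names are illustrative. Fields that a GPU reports as not available would need extra handling before the integer conversion.

```python
import csv
import io
import subprocess

QUERY_FIELDS = ["name", "temperature.gpu", "utilization.gpu", "memory.used", "memory.total"]


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts."""
    rows = []
    for record in csv.reader(io.StringIO(text), skipinitialspace=True):
        if not record:
            continue  # skip blank lines
        name, temp, util, used, total = (field.strip() for field in record)
        rows.append({
            "name": name,
            "temperature_c": int(temp),
            "utilization_pct": int(util),
            "memory_used_mib": int(used),
            "memory_total_mib": int(total),
        })
    return rows


def query_gpus():
    """Run nvidia-smi on this host and return one dict per GPU."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


# Hypothetical output, for illustration only (not captured from a real system).
SAMPLE = "NVIDIA H100 PCIe, 41, 87, 60234, 81559\nNVIDIA H100 PCIe, 39, 0, 12, 81559\n"

if __name__ == "__main__":
    for gpu in parse_gpu_csv(SAMPLE):
        print(gpu)
```

On a host with GPUs, calling `query_gpus()` in place of the sample text returns the same structure, which can then be forwarded to whatever cluster monitoring system is already in use.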